What Is the Average Response Rate for Surveys?

Discover the average response rate for surveys, what influences it, and proven strategies to get more people to complete your next survey.

So, what’s a “good” survey response rate anyway?

The short answer is: it depends. Average survey response rates can fall anywhere from 5% to 30%, and that number shifts dramatically depending on who you're asking and how you're asking them.

For example, internal surveys sent to employees often pull in response rates of 30% or more. They know you, they're invested, and it's part of the company culture. On the other hand, external surveys targeting customers or the general public might see rates closer to the 10-15% range, which is still a very respectable number.

What Defines a Good Survey Response Rate

Before we get too hung up on the numbers, let's be clear on what a survey response rate actually is. It’s the percentage of people who finished your survey out of the total number of people you invited. Simple as that.

There’s no magic number that screams "success" for every single survey. The real value in knowing the averages is setting realistic goals for yourself. It helps you figure out what's achievable and what's a stretch.

Think of it as a baseline. Knowing what to expect keeps you from feeling discouraged by a 15% response rate on a customer feedback survey when, in reality, that might be a huge win for your industry.

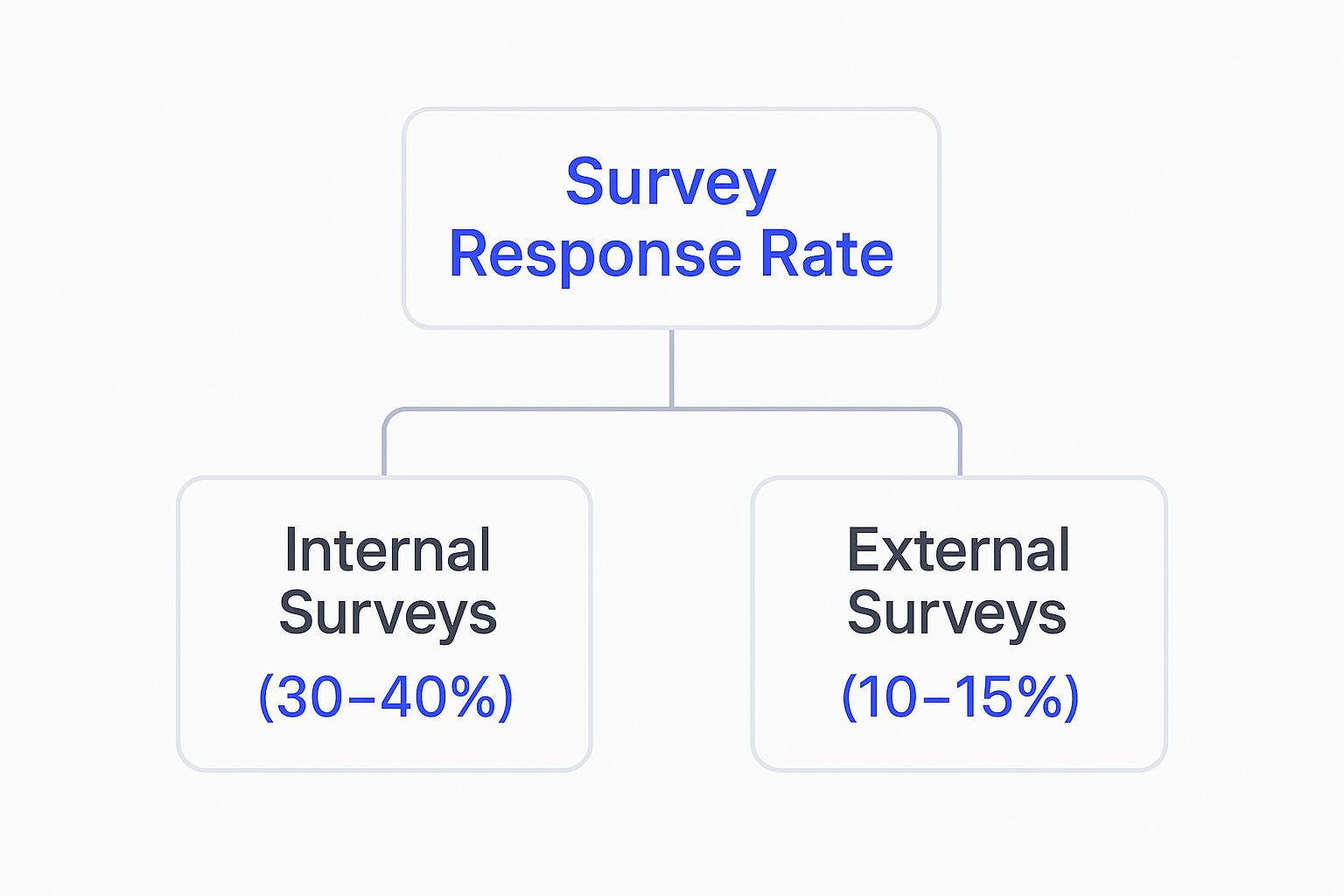

This handy visual breaks down how response rates typically look for the two main categories of surveys.

As you can see, it all comes down to your relationship with the audience. People you already have a connection with, like your internal teams, are almost always going to be more willing to give you their time.

The Elephant in the Room: Why Are Response Rates Declining?

It's not just you. Getting people to take surveys is genuinely harder than it used to be. Over the last couple of decades, participation has been on a steady downward slide. What was once seen as a helpful activity is now often viewed as just another interruption in a very busy day.

Just look at the data for telephone surveys. Response rates plummeted from 36% in 1997 to a mere 9% by 2016. This isn't a blip; it’s a major cultural shift. We're all dealing with survey fatigue, growing privacy concerns, and a general sense of distrust. You can dig into the full research about this sustained decline and its causes to see the bigger picture.

A good response rate is less about hitting a universal number and more about gathering enough quality feedback to make confident decisions. The goal is a representative sample, not just a high percentage.

Knowing this context is everything. It means our survey strategies have to work smarter, not just harder. We have to earn every single response by respecting people's time, showing them exactly why their feedback matters, and making the entire experience as painless as possible.

Exploring Different Survey Response Benchmarks

The metric isn't one-size-fits-all. The right benchmark depends on who you're asking, what your relationship with them is like, and why you're asking in the first place.

Trying to compare your latest customer feedback survey to an internal employee poll is like comparing apples and oranges. The motivations are completely different, and so are the results you should expect. Let's break down the common benchmarks so you can set some realistic goals.

Internal vs. External Surveys

The biggest fork in the road for response rates is whether your survey is going out to your own team or to the outside world.

Internal Surveys (Employees): This is where you'll see the highest engagement. Response rates often land in the 30% to 40% range, and sometimes even higher. It makes sense, right? Employees have a vested interest in the company and believe their feedback might actually improve their day-to-day work life.

External Surveys (Customers): These are a tougher crowd. You can typically expect rates to fall between 10% and 15%. Your customers are busy people who are constantly being asked for feedback from dozens of companies. They just don't have the same built-in investment as your team.

It all boils down to the existing relationship. An employee survey feels like part of an internal conversation. A customer survey, on the other hand, is an outsider asking for a moment of their time. If you want to see how these numbers break down across different channels, we've got more details on survey response rate benchmarks.
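If you want to check your own numbers against these rough benchmarks, a tiny helper can do the comparison. This is a minimal Python sketch, assuming the ranges quoted above (30% to 40% for internal surveys, 10% to 15% for external ones). The `BENCHMARKS` table and `compare_to_benchmark` function are hypothetical names, and you should swap in whatever ranges fit your own industry and channel.

```python
# Hypothetical benchmark table using the ranges quoted in this article (percent).
# These are rough guides, not industry standards; adjust them for your audience.
BENCHMARKS = {
    "internal": (30.0, 40.0),  # employee surveys
    "external": (10.0, 15.0),  # customer surveys
}


def compare_to_benchmark(rate: float, survey_type: str) -> str:
    """Say whether a response rate (in percent) is below, within, or above the range."""
    if survey_type not in BENCHMARKS:
        raise ValueError(f"unknown survey type: {survey_type!r}")
    low, high = BENCHMARKS[survey_type]
    if rate < low:
        return "below typical range"
    if rate > high:
        return "above typical range"
    return "within typical range"


print(compare_to_benchmark(15.0, "external"))  # within typical range
print(compare_to_benchmark(15.0, "internal"))  # below typical range
```

The same 15% that counts as a solid result for a customer survey sits below the typical range for an employee survey, which is why you have to compare against the right baseline.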

B2B vs. B2C Surveys

The business context, whether you're talking to another business (B2B) or directly to a consumer (B2C), also shifts the goalposts.

When you send a B2B survey, you're usually reaching out to a professional contact you already have a working relationship with. If the feedback you're asking for can help them in their job, you might see better engagement. The catch? These professionals are incredibly busy, which can just as easily push your response rates down.

B2C surveys, which go out to individual consumers, are a different ballgame. They often hinge on recent experiences and brand loyalty. A customer who just had a fantastic (or terrible) interaction is highly motivated to respond, but getting feedback from the quiet majority is much harder. This makes B2C rates generally lower and more unpredictable than B2B.

At the end of the day, the strongest predictor of a good response rate is your relationship with the person you're surveying. An engaged, loyal customer is far more likely to give you their time than a passive one. The same goes for an invested employee versus one who's checked out. Keeping these nuances in mind is key to setting goals you can actually hit.

Key Factors That Influence Your Response Rate

Ever wonder why some surveys get flooded with replies while others just disappear into the void? It's a common frustration. The difference between a sky-high response rate and a dismal one often boils down to a handful of important elements.

Figuring out these factors is the first step to understanding why your own surveys might be falling flat. You need to create an experience that actually makes people want to respond. If a survey feels like a chore, you can bet most people will bail.

Let's break down each component, from the initial email invite to that final "submit" button, so you can spot the weak links and make some real improvements.

Survey Length and Complexity

Let's be honest: one of the biggest survey-killers is length. The moment a survey looks like it’s going to drag on, participation drops off a cliff. People are busy, and their time is valuable.

Surveys that take less than 7 minutes to finish tend to have the best shot at being completed. Once you start creeping past the 12-minute mark, expect drop-offs to climb sharply. The key is to keep your questions sharp and to the point.

- Keep it short: For quick pulse checks, stick to 1-3 questions. For more detailed reviews, aim for no more than 10-15.

- Keep it simple: Ditch the jargon. Use clear, straightforward language that doesn’t require a ton of mental gymnastics.

- Show progress: A simple progress bar works wonders. It lets people know where they stand and makes the whole thing feel less endless.

If you want to dig deeper into crafting questionnaires that people actually finish, check out our guide on survey design best practices.

Audience Relevance and Trust

Are you asking the right people the right questions? If your survey topic is completely irrelevant to the person receiving it, they’ll have zero reason to click. A little personalization goes a long way in showing them why their specific opinion is valuable.

Trust is the other side of that coin. People are rightfully wary about sharing their information if they don't trust the sender or understand how their data will be used. A professional, transparent invitation that clearly explains the "why" behind the survey can build the confidence you need to earn that click.

Your survey respondents are often your most engaged customers. They are giving you a signal of trust and an opportunity to build a stronger relationship, which goes far beyond just collecting data.

Figuring out why people ignore outreach in general is a huge piece of the puzzle. Exploring common reasons for low response rates in outreach can shed light on issues that apply directly to getting your surveys seen and answered.

Invitation and User Experience

Think of your survey invitation as the handshake. A boring, generic subject line will get you ignored before the conversation even starts. Personalizing the invite and clearly stating the value of their feedback can give your open rates a serious boost.

Finally, the user experience has to be buttery smooth, especially on mobile. We live on our phones. A survey that’s a nightmare to navigate on a small screen will be abandoned in seconds. Make sure your design is clean, responsive, and dead simple to use on any device.

When you nail these factors, you do more than improve your metrics. You show people you respect their time, which makes them far more willing to share their valuable thoughts with you.

How Incentives and Design Can Make a Difference

Let's be honest: the average response rate for surveys isn't exactly climbing. Blasting out a questionnaire and hoping for the best is a strategy that's doomed to fail. Why? Because most surveys are a one-way street.

The most successful ones operate on a simple "give-get" model. When you offer people something valuable for their time, something that actually benefits them, they're far more likely to stick around and finish what they started.

This value doesn't always have to be a gift card or a discount code. In a professional setting, the best incentive is often exclusive information. Giving respondents a sneak peek at the final results or sharing unique data-driven insights can be a huge motivator.

This simple shift turns a tedious task into a genuine exchange of value. Participants no longer feel like they’re just giving away their time for free. They're part of a worthwhile collaboration.

The Power of a Clear Value Proposition

If you want to see this in action, look no further than the S&P Global Purchasing Managers’ Index (PMI) surveys. These surveys are legendary for their high engagement, maintaining an incredible average response rate of 73% between October 2023 and September 2024.

How do they do it? Their entire model is built on that "give-get" principle. The executives who participate get early access to important economic insights, data that directly helps them in their own roles. It's a clear win-win. This is a world away from many academic or government surveys that ask for time but offer nothing in return. You can read the full analysis on S&P Global's PMI survey performance to see just how effective this is.

The lesson here is simple: when people see a clear "what's in it for me," they show up. A strong value proposition isn't a nice extra. It's a fundamental tool for getting people to actually respond.

Designing Surveys People Want to Complete

Incentives get people in the door, but good design keeps them there. A clunky, confusing, or ridiculously long survey is a surefire way to lose people's attention fast. Every single question needs a purpose, and the whole experience should respect the user's time.

Here are a few design elements that make a world of difference:

- Clarity and Simplicity: Ditch the corporate jargon. Use straightforward language that anyone can understand. If a question is confusing, it's a bad question.

- Visual Progress: A simple progress bar is a small but mighty tool. It manages expectations and shows people the light at the end of the tunnel, which can dramatically reduce how many people drop off halfway through.

- Mobile-First Thinking: Most people are going to open your survey on their phone. It's just a fact. Make sure it looks good and works flawlessly on a small screen.

A well-designed survey feels less like an interrogation and more like a conversation. By focusing on the user's experience and offering real value, you're not just aiming for a better average response rate for surveys; you're building a stronger relationship with your audience.

How Survey Response Rates Vary Around the World

You can't just copy-paste a survey strategy and expect it to work everywhere. What gets you amazing results in one country could totally bomb in another. The world is a big place, and things like cultural norms, trust in institutions, and even how people prefer to be contacted create a really complex picture.

If your entire survey plan hinges on email, for example, you might do great in one region but completely miss the boat somewhere else. Knowing these global quirks is mission-critical for anyone doing international research. It’s all about setting realistic goals and tweaking your approach to fit the local vibe.

It might surprise you, but old-school methods like postal or in-person surveys can still deliver incredibly high response rates in certain parts of the world, far higher than what we typically see in Western countries. This is a perfect example of how cultural attitudes directly shape whether people are willing to share their opinions.

Regional Response Rate Variations

Just how different are we talking? A look into global healthcare surveys uncovers some pretty stark contrasts. While we might be all-in on digital, some places are proving that traditional methods are still king.

For instance, India reports a stunning 93.3% average response rate from patients when using methods like postal or in-person questionnaires. You see similarly high numbers in Saudi Arabia and several African countries, where response rates often soar past 88%.

Now, compare that to the United States, which pulls in an average patient response rate of just 64.2% for similar surveys. It's a massive difference. You can explore the full regional breakdown of these survey findings to see just how much the numbers vary.

This data is a powerful reminder that a "one-size-fits-all" approach to surveys is a recipe for failure. The best method is always the one that makes sense for the cultural and technological reality of your audience.

So, Why The Huge Difference?

A few key factors are at play here, and getting a handle on them will help you build a much smarter, more effective global strategy.

- Trust in Institutions: In some cultures, people have a much higher level of trust in organizations like healthcare providers or government agencies. This makes them far more willing to participate when those institutions ask for their feedback.

- Communication Preferences: We might live in our email inboxes, but that's not universal. In other regions, people might strongly prefer face-to-face conversations, phone calls, or even SMS. If you ignore these preferences, you're leaving a ton of potential responses on the table.

- Cultural Norms: People's attitudes toward privacy and sharing personal opinions are wildly different across the globe. Some cultures see participating in a survey as a civic duty, while others are naturally more reserved or skeptical.

By simply acknowledging and respecting these global differences, you can design international survey campaigns that feel natural to the local audience and, as a result, get way more people to actually participate.

Practical Steps to Boost Your Survey Responses

Knowing what makes a good average response rate for surveys is one thing. Actually getting there is all about execution. To turn those concepts into real results, you have to think about the entire survey experience, from the moment someone sees your invitation to that final click on the "submit" button.

Every single step is a chance to either win a response or lose a potential participant for good. The whole game is about building trust and showing people you respect their time. Even small tweaks can lead to a huge lift in your numbers.

Write an Invitation They Can’t Ignore

Let's be honest: a generic, uninspired email is a one-way ticket to the trash folder. Your survey invitation is your first impression, and you need to make it count. The goal is to be clear, personal, and upfront about the value you're offering.

Start with a subject line that's engaging but not spammy. Instead of something dull like "Customer Feedback Survey," try something that sparks a little curiosity. Personalizing the greeting with the recipient's name is another simple but powerful touch.

Most importantly, tell them why you're asking. How will their feedback actually be used? Why does it matter? When people know their opinion can make a real difference, they're far more likely to share it.

Time Your Survey for Maximum Impact

When you send your survey matters just as much as what you send. Firing off an email at a random time is just leaving responses on the table. We’ve seen that most people tend to respond to surveys on weekdays, with Wednesday and Thursday often hitting the sweet spot.

The time of day makes a difference, too. Early morning before 10 AM and mid-afternoon between 2-3 PM are often peak times for engagement. A good rule of thumb is to avoid sending surveys during obviously stressful periods, like the end of a business quarter for B2B contacts or major holidays for consumers.

Your goal is to slide into their workflow naturally, not interrupt it. A well-timed survey feels like a relevant request, while a poorly timed one feels like a nuisance.

Sending a couple of polite, well-spaced reminders can also give your numbers a healthy bump. A common strategy is to send one or two follow-ups to non-responders, but just be careful not to overdo it and become annoying.

Nail Your Survey Design

A clean, user-friendly design is absolutely non-negotiable. If your survey is confusing, cluttered, or just plain hard to navigate, people will bail. No question.

Here are a few best practices to live by:

- Keep it Short and Sweet: Seriously. Aim for a completion time of under 5 minutes. The shorter the survey, the higher the completion rate.

- Use Clear Language: Ditch the industry jargon and overly complex wording. Questions should be simple and easy to understand at a glance.

- Make it Mobile-Friendly: A massive chunk of your audience will open your survey on their phone. It has to look good and be easy to use on a small screen.

- Show Their Progress: A simple progress bar is a small but mighty tool. It manages expectations and gives people that little psychological nudge to finish.

By putting these practical steps into action, you're not just asking for feedback. You're creating an experience that encourages people to participate. For a deeper dive, you can learn more about how to improve your survey response rate in our dedicated guide.

A Few Lingering Questions About Survey Rates

Let's clear up some of the most common questions that pop up when talking about survey response rates.

How Do I Calculate My Survey Response Rate?

Calculating your response rate is pretty straightforward. Take the number of people who actually finished your survey and divide it by the number of people who received it: the total you sent it to, minus any emails that bounced. You want a clean number here.

Then, multiply that result by 100 to see it as a percentage. So, if your survey went out to 500 people and 75 of them completed it, your response rate is a solid 15%.
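The calculation described above is easy to script if you export invitation and completion counts from your email or survey tool. Here is a minimal Python sketch; the `response_rate` function and its `bounced` parameter are illustrative names, not part of any survey platform's API.

```python
def response_rate(completed: int, sent: int, bounced: int = 0) -> float:
    """Return the survey response rate as a percentage.

    Bounced invitations are subtracted from the number sent, so the
    denominator only counts people who could actually receive the survey.
    """
    delivered = sent - bounced
    if delivered <= 0:
        raise ValueError("at least one invitation must be delivered")
    if not 0 <= completed <= delivered:
        raise ValueError("completed must be between 0 and the number delivered")
    # Completed responses divided by delivered invitations, times 100.
    return 100 * completed / delivered


# The example from the text: 500 invitations sent, 75 completed surveys.
print(response_rate(75, 500))  # 15.0

# Same 75 completions, but 20 of the 500 emails bounced.
print(response_rate(75, 500, bounced=20))  # 15.625
```

Subtracting the 20 bounced emails nudges the rate up slightly, because those people never had a chance to respond.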

Is a Low Response Rate Always a Bad Thing?

Not always. While everyone wants a high response rate, the quality of the responses you get matters more than the quantity. The real danger you need to sidestep is something called nonresponse bias.

This is what happens when the people who don't answer your survey are fundamentally different from the people who do. If that happens, your data gets skewed, and the insights you pull from it won't be reliable. As long as your respondents are a good reflection of your target audience, even a smaller number can give you incredibly valuable feedback.
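One rough way to spot possible nonresponse bias is to compare who answered with who you invited. The Python sketch below assumes you can tag each person with a segment label such as plan tier or region. The `representation_gaps` function and its 5-percentage-point `threshold` are hypothetical choices, and this is a quick heuristic, not a formal statistical test: it can only reveal imbalances on traits you actually track.

```python
from collections import Counter


def representation_gaps(invited, respondents, threshold=5.0):
    """Flag segments that are over- or under-represented among respondents.

    `invited` and `respondents` are lists of segment labels (for example, plan
    tier or region), one label per person. For each segment in the invited
    list, compare its share of respondents with its share of invitees and
    return the segments whose gap exceeds `threshold` percentage points.
    A positive gap means the segment is over-represented among respondents.
    """
    if not invited or not respondents:
        raise ValueError("both lists must be non-empty")
    invited_counts = Counter(invited)
    respondent_counts = Counter(respondents)
    gaps = {}
    for segment, count in invited_counts.items():
        invited_share = 100 * count / len(invited)
        # Counter returns 0 for a segment with no respondents at all.
        respondent_share = 100 * respondent_counts[segment] / len(respondents)
        gap = respondent_share - invited_share
        if abs(gap) > threshold:
            gaps[segment] = round(gap, 1)
    return gaps


# Hypothetical data: 1,000 invitees (70% free, 30% paid), 100 respondents.
invited = ["free"] * 700 + ["paid"] * 300
respondents = ["free"] * 40 + ["paid"] * 60
print(representation_gaps(invited, respondents))  # {'free': -30.0, 'paid': 30.0}
```

In this made-up example, paid users answered at twice their share of the invite list, so simply averaging all responses would over-weight their views.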

How Many Reminders Should I Send?

Sending between one and three reminders is usually the sweet spot. A good rhythm is to send the initial invitation, follow up about three or four days later, and then give one final nudge a week after the first email.

Just make sure you switch up the messaging a bit each time. Remind them why their feedback is so important. This makes the request feel much more personal and less like an automated blast.
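The three-touch rhythm described above maps neatly onto a small scheduling helper. This Python sketch assumes defaults of a follow-up 3 days after the first email and a final nudge 7 days after it. It also borrows the earlier point about weekday sends by moving any weekend date to the following Monday. `reminder_schedule` and `next_weekday` are hypothetical helpers, not functions from any email or survey tool.

```python
from datetime import date, timedelta


def next_weekday(day: date) -> date:
    """Push a Saturday or Sunday forward to the following Monday."""
    while day.weekday() >= 5:  # weekday(): Monday is 0, Sunday is 6
        day += timedelta(days=1)
    return day


def reminder_schedule(first_send: date, follow_up_days: int = 3, final_days: int = 7) -> dict:
    """Plan an invitation plus two reminders, with offsets counted from the invitation."""
    start = next_weekday(first_send)
    return {
        "invitation": start,
        "reminder": next_weekday(start + timedelta(days=follow_up_days)),
        "final_reminder": next_weekday(start + timedelta(days=final_days)),
    }


# Hypothetical campaign starting on Wednesday, 5 June 2024.
for label, send_on in reminder_schedule(date(2024, 6, 5)).items():
    print(label, send_on.isoformat())
# invitation 2024-06-05
# reminder 2024-06-10
# final_reminder 2024-06-12
```

Here the first reminder would have landed on Saturday, 8 June, so it moves to Monday, 10 June; the final nudge falls on a Wednesday and stays put.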

Ready to turn feedback into growth? With Surva.ai, SaaS companies can build intelligent surveys and automated churn-deflection flows that boost retention and uncover actionable insights. Start understanding your users better today.