8 Common Bad Survey Questions to Avoid in 2025

Learn to identify and fix bad survey questions. This guide covers 8 common mistakes with clear examples and expert rewrites to improve your data quality.

With customer feedback, the quality of your insights is only as good as the quality of your questions. Bad survey questions generate skewed, unreliable data that can lead your business down the wrong path. Making strategic decisions based on flawed feedback is often worse than having no data at all, resulting in wasted resources and misguided product development. Because poor survey wording carries these costs, investing in solid business writing skills training pays off for any data-driven team.

This guide moves beyond theory to provide a practical, hands-on breakdown of the most common question-writing pitfalls. We will dissect the 8 most common types of bad survey questions that sabotage your data collection efforts, from leading and double-barreled questions to those filled with jargon or assumptions. For each type, we'll provide real-world examples of what not to do, a deep strategic analysis of why they fail, and actionable rewrites you can implement immediately. By mastering the art of question design, you can ensure the feedback you collect is accurate, insightful, and powerful enough to drive real growth for your product and company.

1. Leading Questions

Leading questions are a classic example of bad survey questions because they subtly nudge respondents toward a specific answer. Instead of collecting genuine, unbiased feedback, they contaminate your data by embedding assumptions, emotional language, or social pressure directly into the question itself. This creates confirmation bias, where you're not learning what your audience truly thinks but rather validating a preconceived notion.

The core issue with leading questions is their manipulative nature. They don't seek truth; they seek agreement. This is why they are one of the most critical types of bad survey questions to eliminate from your research.

The Problem with Biased Phrasing

Consider this common yet flawed question:

Bad Example: Don't you agree that our new user interface is much more intuitive?

This question immediately establishes a positive frame ("more intuitive") and uses a leading phrase ("Don't you agree") that implies a correct answer. Respondents may feel pressured to agree to avoid being contrarian, regardless of their actual experience. The data collected from this question won't reliably measure user sentiment; it will only measure their willingness to concur.

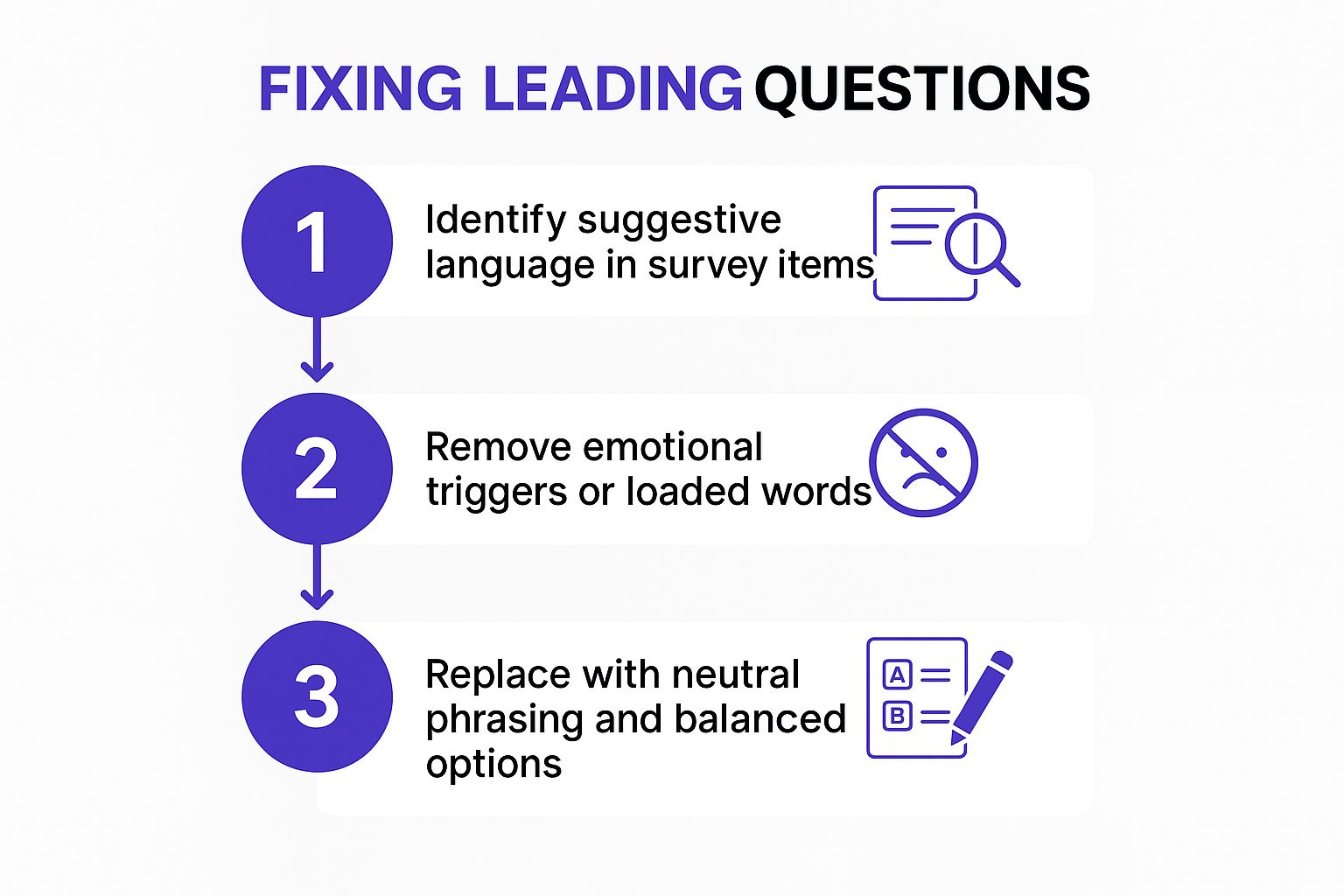

How to Fix Leading Questions

To get authentic feedback, rephrase the question to be neutral and open-ended. Focus on the user's experience without suggesting an outcome.

Good Example: How would you describe your experience with our new user interface?

This revised version removes the bias. It allows the respondent to provide honest feedback, whether positive, negative, or neutral, giving you far more valuable and actionable insights.

Fixing leading questions follows a simple, repeatable sequence: spot the phrases that signal an expected answer, remove them, and rephrase the question in neutral terms. Following this sequence ensures you systematically strip bias from your questions, leading to more objective and reliable data collection.
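One way to make the first step of such a review repeatable is a small script that flags suspicious phrasing before a survey goes out. The sketch below is only an illustration: the `LEADING_PATTERNS` list and the `find_leading_phrases` helper are hypothetical names, and the phrase list is a short, non-exhaustive assumption rather than an established standard.

```python
import re

# Hypothetical, non-exhaustive list of phrases that signal an expected answer.
LEADING_PATTERNS = [
    r"\bdon't you (agree|think)\b",
    r"\bwouldn't you (agree|say)\b",
    r"\bisn't it\b",
    r"\bmuch (more|better)\b",
]


def find_leading_phrases(question: str) -> list[str]:
    """Return the leading phrases found in a question, in pattern order."""
    hits = []
    for pattern in LEADING_PATTERNS:
        match = re.search(pattern, question, flags=re.IGNORECASE)
        if match:
            hits.append(match.group(0))
    return hits


bad = "Don't you agree that our new user interface is much more intuitive?"
good = "How would you describe your experience with our new user interface?"

print(find_leading_phrases(bad))   # ["Don't you agree", 'much more']
print(find_leading_phrases(good))  # []
```

A check like this only catches wording you have already listed, so it supports a human review rather than replacing it.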

2. Double-Barreled Questions

Double-barreled questions are a frequent and frustrating type of bad survey question: they force respondents to answer two different things at once. By combining distinct ideas into a single query, you make it impossible for someone to provide an accurate answer if they have different feelings about each part. This muddles your data, leaving you with ambiguous feedback that is difficult, if not impossible, to act upon.

The central problem with these questions is the resulting data ambiguity. If a respondent agrees or disagrees, you have no way of knowing which part of the question they are reacting to. This flaw makes them one of the most common yet easily avoidable bad survey questions, and rooting them out is essential for data clarity.

The Problem with Combining Concepts

Consider a question that merges two separate aspects of a user's experience:

Bad Example: How satisfied are you with our product's performance and features?

A respondent might feel the performance is excellent, but the features are lacking, or vice-versa. This question leaves no room for such nuance. It forces a single rating for two potentially unrelated topics, making the resulting satisfaction score meaningless for a product team trying to prioritize improvements.

How to Fix Double-Barreled Questions

To gather precise feedback, simply split the question into two separate, focused inquiries. Each question should address only one concept at a time.

Good Example:

- How satisfied are you with our product's performance?

- How satisfied are you with our product's features?

This approach eliminates confusion and provides clear, actionable data on two distinct areas. By separating the concepts, you can pinpoint specific strengths and weaknesses, allowing for more targeted business decisions. You can learn more by exploring some detailed examples of double-barreled questions.
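As a rough aid for spotting these questions in a long draft, a conjunction check can queue candidates for manual review. This is a minimal sketch under a loud assumption: a conjunction is treated as a hint, not proof, so harmless phrases such as "terms and conditions" will also be flagged. The `may_be_double_barreled` helper is a hypothetical name used for illustration.

```python
import re

# Assumption: a conjunction in a rating question is a hint, not proof,
# that two concepts are sharing one answer scale.
CONJUNCTION = re.compile(r"\b(and|or|as well as)\b", flags=re.IGNORECASE)


def may_be_double_barreled(question: str) -> bool:
    """Flag questions that contain a conjunction so a person can review them."""
    return CONJUNCTION.search(question) is not None


questions = [
    "How satisfied are you with our product's performance and features?",
    "How satisfied are you with our product's performance?",
    "How satisfied are you with our product's features?",
]

for q in questions:
    print(may_be_double_barreled(q), "-", q)
```

Only the first question is flagged; the two split versions pass.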

Understanding how to spot these questions is a critical skill for any survey creator.

3. Loaded or Emotionally Charged Questions

Loaded or emotionally charged questions are a severe form of bad survey questions because they use inflammatory language and assumptions to provoke an emotional reaction. Instead of measuring a respondent's rational opinion, they manipulate the participant by tapping into biases, stereotypes, and strong feelings. This approach completely undermines data integrity by steering respondents toward an answer based on emotion, not on their actual thoughts or experiences.

The fundamental flaw of loaded questions is their inherent bias. They are designed not to collect honest feedback but to validate a pre-existing, often negative, viewpoint. This makes them one of the most destructive types of bad survey questions, as they generate data that is emotionally skewed and practically useless for objective analysis.

The Problem with Biased Phrasing

Consider this highly charged question often seen in biased market research:

Bad Example: How do you feel about the company's greedy and unfair pricing policies?

This question frames the company's pricing as "greedy and unfair" before the respondent even has a chance to answer. The use of emotionally charged adjectives makes it difficult for someone to disagree without feeling like they are defending unethical practices. The resulting data will almost certainly reflect widespread dissatisfaction, not because it's genuinely felt, but because the question was designed to produce that outcome.

How to Fix Loaded Questions

To gather authentic opinions, you must strip away all emotional language and present the topic neutrally. Rephrase the question to focus on the respondent's perception without any embedded judgment.

Good Example: How would you rate the fairness of our company's pricing?

This corrected version removes the inflammatory words "greedy" and "unfair." It allows respondents to evaluate the pricing on their own terms, whether they find it to be fair, unfair, or somewhere in between. This neutral phrasing is essential for collecting objective data that accurately reflects customer sentiment and provides actionable insights.

4. Overly Complex or Jargon-Heavy Questions

Overly complex questions are a prime example of bad survey questions because they alienate respondents with jargon, technical terms, or convoluted sentence structures. When participants can't understand what you're asking, they either guess, skip the question, or abandon the survey entirely. This undermines data quality by introducing confusion and frustration into the response process.

The core issue here is a failure in communication. Effective surveys use clear, simple language accessible to the entire target audience, not just industry insiders. Using jargon creates a barrier, making your data unreliable and potentially skewed by respondents who are either too confused or too embarrassed to answer accurately.

The Problem with Jargon-Heavy Phrasing

Consider a question that might seem normal to a product team but is baffling to a typical user:

Bad Example: What are your thoughts on our organization's synergistic approach to stakeholder value proposition enhancement?

This question is filled with corporate jargon like "synergistic," "stakeholder," and "value proposition enhancement." The average customer won't know what this means, making it impossible to provide a meaningful answer. The data collected would be useless because it doesn't reflect genuine customer sentiment, only their confusion.

How to Fix Jargon-Heavy Questions

To get authentic feedback, translate the question into simple, everyday language. Focus on the tangible benefit or experience you want to measure, avoiding abstract business terms.

Good Example: How well do you feel we are meeting the needs of everyone involved with our company?

This revised version removes the jargon and asks about a concept everyone can understand: meeting needs. It allows all respondents to provide clear, honest feedback based on their actual perceptions, giving you much more valuable and actionable insights. This simplification process is key to avoiding these types of bad survey questions.

5. Absolute or Extreme Response Options

Absolute questions are a prominent form of bad survey questions because they force respondents into making extreme, black-or-white choices. By only offering polar opposite answers like 'Always/Never' or 'Yes/No' to complex topics, they completely eliminate nuance and fail to capture the reality of your audience's experience. This all-or-nothing approach creates inaccurate data by pushing people to pick a side that doesn't truly represent their middle-ground perspective.

The core issue with absolute questions is their rigidity. They assume experiences are binary, but most customer opinions exist on a spectrum. Eliminating these inflexible response options is crucial for collecting data that reflects genuine sentiment, making them a key type of bad survey questions to avoid.

The Problem with Polarized Choices

Consider a question designed to measure customer satisfaction:

Bad Example: Are you always satisfied or never satisfied with our customer support?

This question creates a false dilemma. A customer who is usually satisfied but had one bad experience cannot answer honestly. They are forced to choose between "always" (inaccurate) or "never" (also inaccurate), leading to skewed and unreliable results. The data you collect will show an artificially polarized view of your support quality, missing the crucial details in between.

How to Fix Absolute Questions

To capture authentic feedback, you must provide a balanced scale that includes moderate options. This allows respondents to answer more accurately, reflecting the true nature of their experience.

Good Example: How satisfied are you with our customer support?

- Very Satisfied

- Somewhat Satisfied

- Neither Satisfied nor Dissatisfied

- Somewhat Dissatisfied

- Very Dissatisfied

This revised version uses a five-point Likert-type scale, a common and effective tool for measuring sentiment. It gives respondents the flexibility to place their opinion accurately on a spectrum, providing you with much richer, more actionable data. To explore more effective response scales and question formats, you can learn more about the different types of survey questions on surva.ai. Offering a full range of choices ensures you are measuring reality, not forcing a distorted one.
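To show what the graduated scale buys you at analysis time, here is a minimal sketch that tallies responses to the five options above. The 1-5 coding in `SCALE`, the `summarize` helper, and the sample responses are all assumptions made up for illustration; they are not data from any real survey.

```python
from collections import Counter

# Assumed 1-5 coding for the five labels; the numeric values are a convention.
SCALE = {
    "Very Dissatisfied": 1,
    "Somewhat Dissatisfied": 2,
    "Neither Satisfied nor Dissatisfied": 3,
    "Somewhat Satisfied": 4,
    "Very Satisfied": 5,
}


def summarize(responses: list[str]) -> dict:
    """Return the count per label and the share of satisfied respondents."""
    counts = Counter(responses)
    satisfied = sum(1 for r in responses if SCALE[r] >= 4)
    return {
        "counts": {label: counts.get(label, 0) for label in SCALE},
        "satisfied_share": satisfied / len(responses),
    }


# Made-up responses for illustration only.
sample = [
    "Very Satisfied",
    "Somewhat Satisfied",
    "Somewhat Dissatisfied",
    "Very Satisfied",
]
result = summarize(sample)
print(result["counts"])
print(result["satisfied_share"])  # 0.75
```

With an Always/Never question, the "Somewhat" responses in this sample would have been forced into one of two inaccurate buckets.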

6. Vague or Ambiguous Questions

Vague or ambiguous questions are another major category of bad survey questions because they lack the specificity needed for clear interpretation. When a question uses undefined terms, broad concepts, or lacks context, each respondent is forced to answer based on their own personal interpretation. This ambiguity introduces significant noise into your data, making it impossible to compare responses accurately.

The core issue with vague questions is the inconsistency they create. You think you're measuring one thing, but respondents are answering several different questions that exist only in their minds. This is why eliminating ambiguity is a non-negotiable step in crafting effective surveys and avoiding misleading conclusions.

The Problem with Unclear Phrasing

Consider a question that seems straightforward but is actually full of ambiguity:

Bad Example: How often do you use our product?

What does "often" mean? To one user, it could mean daily. To another, it could mean a few times a month. The term is subjective and lacks a concrete timeframe. Furthermore, what constitutes "use"? Does logging in count? Or does it require performing a specific action? The data from this question will be unreliable because the reference point varies for every participant.

How to Fix Vague or Ambiguous Questions

To get precise and comparable data, you must add specificity and provide clear, defined answer choices. Anchor the question to a concrete timeframe and define the terms you use.

Good Example: In a typical week, how many days do you log into our platform to complete a task?

- Every day (7 days a week)

- Most days (4-6 days a week)

- A few days a week (2-3 days a week)

- About once a week

- Less than once a week

- I never log in

This revised version removes all ambiguity. It defines the timeframe ("In a typical week"), clarifies the action ("log into our platform to complete a task"), and provides mutually exclusive, specific answer options. This ensures every respondent is answering the exact same question, resulting in clean, actionable data.

7. Questions with Inadequate Response Options

Questions with inadequate response options are a frustrating and common type of bad survey question because they force respondents into a corner. They fail to provide a full range of choices, leading to inaccurate data when participants are compelled to select an answer that doesn't truly reflect their situation or opinion. This flaw can take many forms, including overlapping categories, missing logical options, or scales that don't match the question's intent.

The core issue here is a failure of foresight. When options are incomplete or poorly constructed, you aren't just getting bad data; you are also creating a poor user experience that can lead to survey abandonment. Fixing this is crucial for collecting data that is both accurate and comprehensive.

The Problem with Limited Choices

Consider this question asking about a user's primary device:

Bad Example: How do you primarily access our website?

- Computer

- Phone

This question immediately excludes a significant portion of users. Anyone who primarily uses a tablet, a smart TV, or another web-enabled device has no accurate option to choose. They might randomly select one, skip the question, or abandon the survey entirely. The resulting data will present a skewed and incomplete picture of device usage, potentially leading to poor strategic decisions.

How to Fix Inadequate Response Options

To gather accurate data, ensure your response options are comprehensive and mutually exclusive. Anticipate the different ways a user might answer and provide an appropriate choice for everyone.

Good Example: What is your primary device for accessing our website?

- Desktop or laptop computer

- Smartphone

- Tablet

- Smart TV

- Other (please specify)

This improved version covers the most common devices and includes a vital "Other" option. This ensures every respondent can answer accurately, providing you with clean, reliable, and complete data. When you cannot list every possibility, including an open-ended option is an effective solution. For more details on leveraging this, you can learn more about crafting effective open-ended questions.
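For numeric answer options such as age or spend brackets, the overlapping categories and missing options mentioned earlier can be checked mechanically. The sketch below assumes inclusive integer ranges; the `check_ranges` helper and the age brackets are hypothetical examples, not options taken from a real survey.

```python
def check_ranges(ranges: list[tuple[int, int]], low: int, high: int) -> list[str]:
    """Report overlaps and gaps for inclusive integer ranges covering low..high."""
    problems = []
    expected_start = low
    for start, end in sorted(ranges):
        if start < expected_start:
            problems.append(f"overlap at {start}")
        elif start > expected_start:
            problems.append(f"gap from {expected_start} to {start - 1}")
        expected_start = max(expected_start, end + 1)
    if expected_start <= high:
        problems.append(f"gap from {expected_start} to {high}")
    return problems


# Hypothetical age brackets: 25 appears twice and 45-54 is missing.
flawed = [(18, 25), (25, 34), (35, 44), (55, 120)]
fixed = [(18, 24), (25, 34), (35, 44), (45, 54), (55, 120)]

print(check_ranges(flawed, 18, 120))  # ['overlap at 25', 'gap from 45 to 54']
print(check_ranges(fixed, 18, 120))   # []
```

Categorical options like the device list above cannot be checked this way, which is why an "Other (please specify)" choice remains the practical safeguard there.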

8. Assumptive Questions

Assumptive questions are a common type of bad survey question because they are built on a hidden premise about the respondent. They presuppose that certain experiences, behaviors, or opinions are true without first confirming them, which can force participants into providing inaccurate answers or abandoning the survey altogether out of frustration.

The core issue with assumptive questions is that they invalidate the respondent's actual experience. Instead of gathering clean data, you risk collecting misleading information from people who answer a question that doesn't apply to them, simply because there is no other option. This is why eliminating them is crucial for data integrity.

The Problem with Unverified Premises

Consider this question, which might seem harmless at first glance:

Bad Example: How satisfied are you with your recent purchase from our online store?

This question wrongly assumes that every respondent has recently made a purchase. If a user is just browsing, or if their last purchase was a long time ago, they cannot answer accurately. They might either skip the question, provide a random answer that corrupts your data, or feel that the survey is not designed for them.

How to Fix Assumptive Questions

To get valid feedback, you must first qualify the respondent with a screening question. This ensures you only ask follow-up questions to the relevant audience segment.

Good Example:

- Q1: Have you made a purchase from our online store in the last 30 days? (If Yes, proceed to Q2)

- Q2: How satisfied were you with that purchase experience?

This revised, two-step approach ensures you are not making assumptions. The first question filters the audience, and the second question collects meaningful data from the right people. This logical flow is fundamental to avoiding the pitfalls of bad survey questions. By validating premises before asking for details, you gather far more reliable and actionable insights.
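The screening step is a small piece of skip logic, and most survey tools let you configure it without code. As a tool-agnostic sketch, the flow might look like the following; `run_survey`, `scripted`, and the `ask` callback are hypothetical names standing in for whatever your survey platform provides.

```python
from typing import Callable, Optional

SCREENER = "Have you made a purchase from our online store in the last 30 days?"
FOLLOW_UP = "How satisfied were you with that purchase experience?"


def run_survey(ask: Callable[[str], str]) -> dict[str, Optional[str]]:
    """Ask the screener first; ask the follow-up only after a 'Yes'."""
    q1 = ask(SCREENER)
    q2 = ask(FOLLOW_UP) if q1.strip().lower() == "yes" else None
    return {"q1": q1, "q2": q2}


def scripted(replies: dict[str, str]) -> Callable[[str], str]:
    """Build a stand-in respondent that answers from a fixed script."""
    return lambda question: replies[question]


buyer = run_survey(scripted({SCREENER: "Yes", FOLLOW_UP: "Somewhat Satisfied"}))
browser = run_survey(scripted({SCREENER: "No"}))

print(buyer)    # {'q1': 'Yes', 'q2': 'Somewhat Satisfied'}
print(browser)  # {'q1': 'No', 'q2': None}
```

The respondent who answered "No" is never shown the satisfaction question, so no forced or random rating enters the data.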

8 Types of Bad Survey Questions Compared

| Question Type | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
| --- | --- | --- | --- | --- | --- |
| Leading Questions | Low - Simple to write but needs careful wording | Low - Primarily requires review and testing | Biased and skewed data, reduced validity | Situations needing unbiased, neutral feedback | Easy to spot and correct with proper review |
| Double-Barreled Questions | Moderate - Need to separate multiple concepts | Moderate - Requires question splitting | Unclear, ambiguous responses | Multi-topic surveys requiring precise data | Improves clarity and focus when corrected |
| Loaded or Emotionally Charged Questions | Moderate - Avoid emotional or biased terms | Low to Moderate - Language review needed | Emotionally biased responses, lower reliability | Sensitive or controversial topics needing neutrality | Helps maintain professional credibility |
| Overly Complex or Jargon-Heavy Questions | High - Simplifying technical language is challenging | Moderate to High - Needs expert input and testing | Confused or inaccurate responses | Technical surveys requiring simplification | Enhances understanding and inclusivity |
| Absolute or Extreme Response Options | Low - Simple scales but requires balanced options | Low - Design of response scales | Polarized, less nuanced results | Surveys needing nuanced, graduated response scales | Captures full opinion spectrum when improved |
| Vague or Ambiguous Questions | Moderate - Requires specificity and contextualization | Moderate - Needs term definitions and clarifications | Inconsistent, unreliable data | Broad topics needing precise measurement | Increases data reliability and actionable insights |
| Questions with Inadequate Response Options | Moderate - Requires comprehensive and exclusive options | Moderate - Testing and option design | Incomplete or misleading data | Any survey requiring accurate categorization | Improves respondent satisfaction and data quality |
| Assumptive Questions | Moderate - Needs logic checks and screening questions | Moderate - Survey flow design and validation | Forced or inaccurate answers | Surveys with conditional or follow-up questions | Increases validity and relevance of responses |

From Bad Questions to Actionable Insights

We’ve dissected the anatomy of bad survey questions, moving from the subtle misdirection of leading questions to the confusing clutter of double-barreled inquiries. Throughout this journey, a core principle has emerged: the quality of your insights is directly proportional to the quality of your questions. Asking the right way isn’t a trivial detail; it is the fundamental mechanism for collecting data you can actually trust.

Ignoring these common pitfalls leads to a cascade of negative consequences. Skewed data from loaded questions, incomplete feedback from inadequate response options, and high drop-off rates from overly complex jargon all contribute to a distorted picture of your customer's reality. Making critical product, marketing, or strategic decisions based on this flawed data is like navigating a ship with a broken compass. You're moving, but likely in the wrong direction.

Key Takeaways for Crafting Better Surveys

The journey from asking bad survey questions to gathering genuinely actionable intelligence requires a conscious shift in methodology. It's about moving from assumption to inquiry and from complexity to clarity.

Here are the essential principles to internalize:

- Neutrality is Non-Negotiable: Actively strip your questions of any leading, loaded, or emotionally charged language. Your goal is to uncover the respondent's true feelings, not to validate your own.

- Clarity and Simplicity Win: Every question should be a model of precision. Avoid jargon, break down complex ideas, and ensure there's only one, unambiguous way to interpret what you're asking.

- Focus on a Single Idea: Each question must target one specific concept. Resist the urge to combine ideas into double-barreled questions, as this guarantees muddled and unusable results.

- Empower with Comprehensive Choices: Your response options must be mutually exclusive and collectively exhaustive. Provide an "out" like "Not Applicable" or "Other" to respect the respondent's unique context and prevent forced, inaccurate answers.

The Strategic Impact of High-Quality Questions

Mastering the art of survey design is more than an academic exercise. It is a critical business competency that directly impacts your bottom line and competitive advantage. By systematically eliminating bad survey questions from your feedback loops, you unlock a powerful engine for growth.

You build deeper trust with your user base by showing you respect their time and value their unvarnished opinions. You gather precise, reliable data that empowers your product teams to build features customers actually want, helps your marketing teams craft messages that resonate, and enables your success teams to proactively address churn risks. This isn't just about data collection; it's about building a customer-centric culture fueled by authentic feedback. The result is a more resilient business, higher customer retention, and a clear path to sustainable growth.

Ready to stop guessing and start asking questions that deliver real results? Surva.ai is engineered to help you avoid these common pitfalls with AI-powered question validation and smart templates. Transform your feedback process from a source of bad data to your most powerful engine for growth by visiting Surva.ai today.