Understanding Response Rate for Surveys

What's a good response rate for surveys? This guide explains how to calculate, benchmark, and increase your survey response rates with practical tips.

What is a good response rate for a survey? There is no single magic number. For external surveys, it can swing anywhere from 5% to 30%, and what counts as good depends on who you are asking and how you are asking them.

Think of it this way: an in-app survey popping up for an active user will get a lot more attention than a cold email sent to a massive, generic list.

What Is a Good Response Rate for Surveys?

Everyone wants to know the golden number, but the answer always changes based on your audience, your goal, and your distribution channel. Setting the right expectations from the start is key to figuring out where you stand and what a realistic target looks like for your business.

It is a bit like asking for feedback in person versus leaving a note on a public bulletin board. One is direct and personal, practically guaranteeing some kind of reaction. The other is passive and broad, and you would be lucky to get a glance. The same logic applies to your surveys. The channel you pick has a massive impact on how many people will hit "submit."

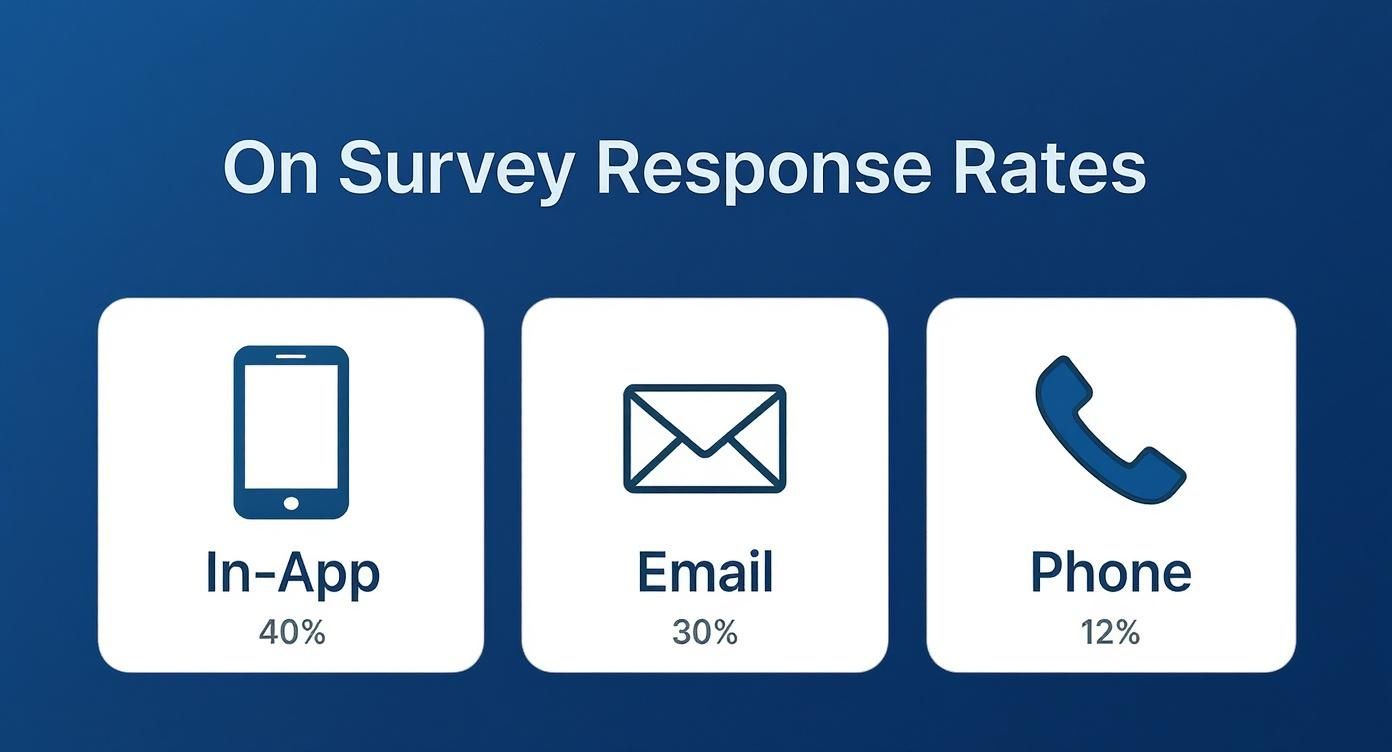

To give you a clearer picture, let's look at how different channels usually stack up, based on industry data.

This infographic breaks down the average response rates for some of the most common survey channels out there, like in-app, email, and phone calls.

As you can probably guess, in-app surveys tend to win the engagement game. Why? Because they catch users at the perfect moment while they are already actively using your product.

Why Does This Metric Matter So Much?

Keeping an eye on your response rate is about more than hitting a target. It is a check on how reliable your data is.

A low response rate can be a huge red flag for something called non-response bias. This is what happens when the small group of people who answer your survey are fundamentally different from the silent majority who do not. Imagine if only your happiest superfans and your angriest critics reply. You would completely miss out on the balanced, nuanced feedback from everyone in between.

A strong response rate is your best defense against skewed data. It gives you confidence that the feedback you have collected is a fair representation of your entire audience, not just a vocal minority.
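To make non-response bias concrete, here is a small Python sketch. The satisfaction scores are invented purely for illustration, and the helper name `share_in_between` is hypothetical. It assumes the extreme case described above, where only the angriest and happiest people reply.

```python
# Hypothetical satisfaction scores (1-5) for a 20-person audience.
# These numbers are made up to illustrate the effect, not real survey data.
audience = [1, 1, 2, 3, 3, 3, 3, 3, 3, 3, 3, 4, 4, 4, 4, 4, 4, 4, 5, 5]

# Assumption: only the angriest critics (1) and happiest superfans (5) reply.
respondents = [score for score in audience if score in (1, 5)]


def share_in_between(scores):
    """Percentage of scores that are neither extreme (2, 3, or 4)."""
    middle = sum(1 for score in scores if 2 <= score <= 4)
    return 100 * middle / len(scores)


response_rate = 100 * len(respondents) / len(audience)
print(f"Response rate: {response_rate:.0f}%")
print(f"Moderate opinions in the full audience: {share_in_between(audience):.0f}%")
print(f"Moderate opinions among respondents: {share_in_between(respondents):.0f}%")
```

In this toy setup, most of the audience holds a moderate opinion, yet none of those people show up in the responses. The survey results would suggest a polarized customer base that does not exist.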

This means you can make decisions based on what most of your customers think. You can look deeper into what makes a solid benchmark by checking out our guide on the average response rate for surveys.

A good survey response rate leads to insights you can trust. It makes sure the feedback you are gathering is a solid foundation for improving products, fine-tuning your marketing, and making smarter business moves. Without it, you are just guessing.

How to Accurately Calculate Your Response Rate

So, you have sent out a survey. Now what? The first real step to figuring out if your feedback efforts are working is to calculate your survey response rate. This simple metric moves you from guessing to knowing.

The standard formula is pretty straightforward, but its power is in getting each piece right. Mess up one part, and the whole picture gets skewed.

Here is the basic formula everyone uses:

Survey Response Rate = (Total Number of Complete Responses / (Total Surveys Sent - Total Bounces)) x 100

This little equation gives you a percentage that tells you what share of the people your invitation actually reached went on to finish the survey. Let’s break it down so you can be confident your numbers are spot on.

Understanding Each Part of the Formula

To get a true read on your survey's performance, you have to be crystal clear on what each variable means. A tiny miscalculation can throw off your entire analysis, leading you to chase problems that do not exist while ignoring the ones that do.

Here’s a simple look at the key elements:

- Total Number of Complete Responses: This is pretty much what it sounds like. It is the number of people who made it all the way to the end of your survey and hit "submit." It is important not to include partial responses here, as they can muddy the waters. Before you even send the survey, define what "complete" means. For most, it is reaching that final thank-you page.

- Total Surveys Sent: This is your starting line. It’s the total number of people you invited to take the survey. If you blasted an email to 1,000 customers, this number is 1,000. Simple as that.

- Total Bounces: This one is super important for accuracy. It refers to any invitation that never made it to the recipient's inbox. Think hard bounces from invalid email addresses or even some soft bounces from temporary server issues. You must subtract these from your "Total Surveys Sent," because those people never even had a chance to say no.

A Practical Calculation Example

Let's walk through a real-world scenario. Imagine your SaaS company just rolled out a slick new feature and you are eager to get feedback from people who have used it.

You pull a segmented list of 2,000 users who have engaged with the new feature and send them a customer satisfaction survey. That is your "Total Surveys Sent."

Once the survey closes, you check your email delivery report and see that 100 of those emails bounced back because the addresses were no longer valid. These are your "Total Bounces." This means your actual, reachable audience was not 2,000, but 1,900 (2,000 - 100).

Hopping over to your survey tool, you see that 285 users completed the survey from start to finish. This is your "Total Number of Complete Responses."

Now, let's plug these numbers into our formula:

Response Rate = (285 / (2,000 - 100)) x 100

Response Rate = (285 / 1,900) x 100

Response Rate = 0.15 x 100

Boom. Your survey response rate is 15%.

Getting this calculation right gives you an honest benchmark of your survey’s health. A 15% response rate for a targeted feedback survey is a solid starting point. It tells you exactly how many people you managed to engage and gives you a clear baseline to improve on for next time.
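If you run this calculation often, it can be wrapped in a small helper. The following is a minimal Python sketch of the formula above. The function name `survey_response_rate` and its parameter names are illustrative choices, not part of any survey tool's API.

```python
def survey_response_rate(complete_responses: int, surveys_sent: int, bounces: int = 0) -> float:
    """Return complete responses as a percentage of delivered invitations."""
    if min(complete_responses, surveys_sent, bounces) < 0:
        raise ValueError("counts must be non-negative")

    # Bounced invitations never reached anyone, so they leave the denominator.
    delivered = surveys_sent - bounces
    if delivered <= 0:
        raise ValueError("no invitations were delivered")
    if complete_responses > delivered:
        raise ValueError("more complete responses than delivered invitations")

    return 100 * complete_responses / delivered


# The worked example: 2,000 sent, 100 bounced, 285 completed.
rate = survey_response_rate(complete_responses=285, surveys_sent=2000, bounces=100)
print(f"{rate:.1f}%")  # prints 15.0%
```

The validation checks are a design choice: they catch swapped arguments or a bounce count larger than the send list before a misleading percentage ends up in a report.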

Exploring the Decline in Survey Participation

If you feel like your survey response rates are not what they used to be, you are not alone. There is a much bigger trend at play. Over the last couple of decades, getting people to take a survey has gotten noticeably harder across the board.

A few key things are driving this shift. For starters, people are busier than ever, and their inboxes are a battlefield of competing requests. This has created a widespread problem we all know too well: survey fatigue. When every single service you use asks for feedback, it is only natural to start tuning it all out.

The Rise of Survey Fatigue

Survey fatigue is what happens when people get asked for feedback so often that they stop responding altogether. Think about it from your own perspective. You buy something online, call customer service, or even visit the doctor, and almost immediately a survey request lands in your inbox. The sheer volume of it can make even the most well-meaning request feel like another chore on the to-do list.

This is a measurable trend. Historical data shows a clear pattern: as the number of survey requests goes up, engagement goes down. The decline has been particularly sharp since the late 20th century, which happens to be right when the floodgates opened on asking for opinions.

Research that analyzed U.S. Census data found something pretty telling. Back in 1980, only about 20% of people said they had taken a survey in the last year. Fast forward to 2001, and that number rocketed to 60%. You can explore the full study on these changing survey participation trends for a deeper look.

This chart from the study paints a pretty clear picture of just how many more survey requests people were getting over those two decades.

The big takeaway here is that while companies are asking for feedback more than ever before, our willingness to give it has not kept up. This is the central challenge we are all facing when trying to collect quality feedback today.

Shifting Trust and Cultural Norms

It is not just the volume of requests, either. Broader cultural shifts are also making it tougher to get responses. A general decline in public trust is a huge factor. People are far more skeptical about how their data is being used and whether their feedback will change anything.

When people doubt their input will be valued or acted on, their motivation to participate plummets. This makes being transparent about your survey's purpose more important than ever.

Here are a few other cultural factors feeding into this trend:

- Privacy Concerns: High-profile data breaches have made everyone a lot more cautious about sharing personal information, even if a survey claims to be anonymous.

- Time Scarcity: Modern life just feels rushed. For someone juggling work, family, and a million other things, a request for "just five minutes" can feel like a big ask.

- Information Overload: We are constantly bombarded with information and requests from every direction. It is incredibly easy for a survey invitation to get lost in the noise.

Knowing these external pressures is the first step toward building a smarter survey strategy. It helps you see that a lower-than-expected response rate is not necessarily a failure on your part. It is a reflection of a much larger shift in how people behave.

With this context in mind, you can start creating surveys that are more strategic, respectful of your audience's time, and more effective. The next sections will show you exactly how.

Key Factors That Influence Survey Response Rates

Getting a solid response rate is about strategy. Several key elements work together to either encourage people to hit ‘submit’ or make them close the tab in frustration. Once you understand these factors, you can stop just hoping for feedback and start designing surveys that get results.

Think of it in four main buckets: how the survey is designed, who you are asking, what motivation you are providing, and how you deliver it. Nailing these can be the difference between a few scattered replies and a goldmine of useful data.

Survey Design and Structure

The way you build your survey is your first and most important hurdle. A poorly designed survey creates friction, and friction is the enemy of completion. A simple, clear, and logical structure respects the user's time and makes the whole process feel effortless.

One of the biggest culprits is survey length. Let's be real, people are busy and their attention spans are short. Research consistently shows that surveys taking longer than 7-12 minutes see a sharp drop-off in completion. Shorter is almost always better. In fact, surveys with just 1-3 questions can hit completion rates over 80%.

Think of your survey like a conversation. A good conversation is focused, relevant, and does not drag on forever. Your survey should feel the same way, asking only what is absolutely necessary to achieve your goal.

The clarity of your questions is just as important. Steer clear of industry jargon, vague wording, or those tricky double-barreled questions that try to ask two things at once. Each question should be a breeze to understand and answer without much thought. For a deeper look, you can explore these survey design best practices to make sure every question counts.

Finally, mobile optimization is completely non-negotiable today. A huge chunk of your audience will open your survey on their phone. If it is not mobile-friendly, they will abandon it in a heartbeat. A responsive layout with big, easy-to-tap buttons and readable text is a basic requirement.

Audience and Relationship

Who you ask is just as important as what you ask. Your existing relationship with your audience has a massive impact on whether they will even bother to respond. It’s no surprise that engaged, loyal customers are far more likely to offer feedback than a cold list of contacts.

Sending the right survey to the right people is key. If you send a detailed product feedback survey to customers who have not used that feature, you are just asking for low engagement and bad data. Proper segmentation makes sure your questions are actually relevant to the person on the other end.

This relevance builds trust. People are much more willing to respond when they feel the survey speaks directly to their experience and believe their feedback will be heard.

- Existing Customers: People who already have a relationship with your brand are your best bet. They have context and a real interest in seeing you improve.

- Brand Loyalty: Customers who feel a genuine connection to your brand are more likely to participate. They see it as a way of contributing to something they care about.

- Perceived Impact: If people believe their feedback will lead to real changes, their motivation to respond skyrockets.
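The segmentation idea from this section, only surveying people who have actually used the feature you are asking about, can be sketched in a few lines of Python. The `User` record, its fields, and the `segment_for_feature` helper are hypothetical. In practice this data would come from your own product analytics.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    # Hypothetical user record; real field names depend on your own data.
    email: str
    features_used: set = field(default_factory=set)


def segment_for_feature(users, feature):
    """Keep only the users who have engaged with the given feature."""
    return [user for user in users if feature in user.features_used]


users = [
    User("ana@example.com", {"reports", "export"}),
    User("ben@example.com", {"export"}),
    User("cho@example.com", set()),
]

# Only Ana has used "reports", so only she gets the reports feedback survey.
invitees = segment_for_feature(users, "reports")
print([user.email for user in invitees])
```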

Incentives and Motivation

Sometimes, a little nudge can go a long way. Incentives can give your response rate a serious boost, but you have to be smart about it to avoid accidentally skewing your results.

Monetary rewards are powerful. Even a small $1 reward has been shown to more than double participation in some studies. The catch? Incentives can introduce bias. Respondents motivated only by a prize might rush through the questions without much thought, or you might attract a specific type of person who does not represent your broader customer base.

Here are a few types of incentives to consider:

- Discounts: Offer a percentage off their next purchase.

- Content Upgrades: Provide access to exclusive goodies like an ebook or a webinar.

- Gift Cards: A small gift card to a popular spot like Amazon or Starbucks can be very effective.

The trick is to match the incentive to your audience and the effort you are asking for. A long, complex survey might call for a bigger reward, while a quick two-question poll might not need one at all.

Distribution and Timing

How and when you send your survey are the final pieces of the puzzle. Hitting the right channel at the right time meets your audience where they are, making it super convenient for them to respond.

The timing of your invitation can have a huge effect. Data shows that sending surveys right after an interaction, like a recent purchase or a resolved support ticket, nets much better feedback. In fact, people provide 40% more actionable feedback immediately after an experience compared to just 24 hours later.

Weekdays generally see higher response rates, with Wednesday and Thursday often being the sweet spot. Sending them in the morning before 10 AM or in the mid-afternoon around 2-3 PM can also catch people during lulls in their day. Finally, picking the right channel, whether it is email, an in-app pop-up, or an SMS, makes sure your request gets seen by the right people at the perfect moment.
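For batch email sends, the weekday and time-of-day guidance above can be turned into a simple scheduling rule. This is a rough Python sketch, not a definitive implementation: the function name `next_send_time` is hypothetical, 9 AM is an assumed pick within the "before 10 AM" window, and time zones are ignored for simplicity.

```python
from datetime import datetime, timedelta

PREFERRED_WEEKDAYS = {2, 3}  # Monday is 0, so 2 = Wednesday and 3 = Thursday
SEND_HOUR = 9  # assumption: 9 AM sits inside the "before 10 AM" window


def next_send_time(now: datetime) -> datetime:
    """Return the next Wednesday or Thursday at SEND_HOUR that is after `now`."""
    candidate = now.replace(hour=SEND_HOUR, minute=0, second=0, microsecond=0)
    if candidate <= now:
        # Today's slot has already passed, so start looking from tomorrow.
        candidate += timedelta(days=1)
    while candidate.weekday() not in PREFERRED_WEEKDAYS:
        candidate += timedelta(days=1)
    return candidate


# Monday, January 1, 2024 at noon -> Wednesday, January 3, 2024 at 9 AM.
print(next_send_time(datetime(2024, 1, 1, 12, 0)))
```

A rule like this only makes sense for scheduled campaigns. Surveys triggered by an interaction, such as a purchase or a resolved ticket, should still go out right after the event.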

To put it all together, here’s a quick look at how different factors can either help or hurt your survey response rates.

Impact of Different Factors on Response Rates

Getting these elements right is a game-changer. By focusing on creating a positive, low-friction experience for your users, you are showing them you value their time.

Practical Strategies to Improve Your Response Rate

Knowing what influences your response rate is one thing, but actively improving it is another game entirely. Getting more people to hit "submit" boils down to creating a survey experience that feels valuable, relevant, and effortless.

Let's look at some proven strategies you can put into action right away.

Think of this as a practical toolkit for your next survey campaign. Each tip is grounded in user experience and a little bit of human psychology, all designed to remove friction and boost your respondents' motivation.

Make a Strong First Impression

How you invite someone to your survey sets the entire tone. A generic, robotic request is just too easy to ignore. On the other hand, a thoughtful and personalized invitation makes people feel seen and valued right from the start.

This all begins with the very first thing they see: the subject line. Your subject line is your one and only shot to stand out in a ridiculously crowded inbox. Instead of a bland label like "Customer Survey," try framing it as a direct benefit to them.

For example, a subject line like, "Help us improve your product experience" feels far more personal and impactful than a simple request for feedback. We have put together a full guide with dozens of high-performing survey email subject lines you can steal for inspiration.

Keep It Short and Focused

One of the biggest reasons people abandon surveys is sheer length. In a world of short attention spans, a long survey feels like a massive commitment. Respecting your audience's time is maybe the single most important thing you can do to bump up your response rate.

Aim to keep your survey under five minutes. Research shows that surveys lasting longer than 12 minutes see a massive drop-off in completion rates. Shorter is almost always better.

To pull this off, you need to be ruthless with your questions. Only ask what is absolutely necessary to meet your goal. If you can get the information from existing data, do not ask for it again. Every single question should have a crystal-clear purpose.

Optimize for Every Device

These days, a huge chunk of your audience will open your survey on a mobile device. If the experience is clunky, requiring pinching and zooming, or featuring tiny buttons that are impossible to tap, you have already lost them.

Mobile optimization is a fundamental requirement. Your survey must have a responsive design that looks and works perfectly on any screen size. This includes:

- Readable Text: Font sizes should be large enough to read comfortably on a small screen.

- Large Buttons: Make sure radio buttons, checkboxes, and submission buttons are easy to tap with a thumb.

- Simple Layout: Avoid complex grids or layouts that do not translate well to a vertical screen.

A seamless mobile experience removes a major point of friction and makes sure you are not alienating a huge part of your audience right off the bat.

Be Clear About Your Purpose

Why should someone take your survey in the first place? People are far more likely to participate if they know the "why" behind your request. A brief, transparent intro can make a world of difference.

Clearly state what you are trying to achieve with the feedback and how it will be used. For instance, you could say, "Your feedback will help us prioritize new features for the next quarter." This shows people their opinion will have a real, tangible impact.

Use Reminders Thoughtfully

Life gets busy. Sometimes even people who fully intend to respond simply forget. A well-timed reminder can significantly lift your response rate without being annoying. The key is to be strategic.

A great way to boost participation is by sending effective email reminders that get opened. Do not just resend the same invitation. A gentle nudge that acknowledges their busy schedule and reiterates the value of their feedback is much more effective. Sending one or two reminders is standard practice, but just be sure to exclude anyone who has already completed the survey.
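The two rules above, capping the number of reminders and skipping anyone who has already responded, can be sketched as a simple filter. The function name `reminder_recipients` and the shape of its inputs are assumptions for illustration. Your survey or email tool may expose this data differently.

```python
def reminder_recipients(invited, completed, reminders_sent, max_reminders=2):
    """Return invitees who have not completed the survey and are under the reminder cap.

    invited: list of email addresses that received the original invitation
    completed: collection of email addresses that finished the survey
    reminders_sent: dict mapping email address -> reminders already sent
    """
    completed_set = set(completed)
    return [
        email
        for email in invited
        if email not in completed_set
        and reminders_sent.get(email, 0) < max_reminders
    ]


invited = ["ana@example.com", "ben@example.com", "cho@example.com", "dev@example.com"]
completed = ["ben@example.com"]
reminders_sent = {"cho@example.com": 2, "dev@example.com": 1}

# Ben already responded and Cho has hit the cap, so only Ana and Dev are reminded.
print(reminder_recipients(invited, completed, reminders_sent))
```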

The Business Impact of a Low Response Rate

It is easy to dismiss a low survey response rate as just another number, a simple vanity metric that does not mean much. But that is a dangerous assumption. The real problem runs much deeper: you risk making critical business decisions based on a completely distorted picture of reality. When participation is low, you open the door to a serious issue known as non-response bias.

This bias creeps in when the small group of people who answer your survey are fundamentally different from the much larger group who ignore it. That gap can create severely skewed data that does not represent your true customer base at all.

Skewed Data and Poor Decisions

Let’s say you send a product feedback survey to thousands of users. If the only people who bother to reply are your most die-hard fans and your most frustrated critics, you have completely missed the perspective of the silent majority. Those are the everyday, average users who likely make up the bulk of your audience.

Acting on this kind of incomplete data is a recipe for disaster. You might pour thousands of dollars into developing a niche feature that only a small, vocal minority clamored for. Or worse, you could overhaul a core system that most of your users were perfectly happy with, all because a few loud voices dominated the feedback.

A low response rate means you’re listening to an echo chamber, not your market. The insights might feel interesting, but they are not a reliable foundation for your business strategy.

This is not a new problem. Declining engagement is a widespread trend. For instance, a series of national health surveys in the UK showed a dramatic drop in participation over several decades. One long-term study saw response rates plummet from 91% in 1985 to just 51% by 2010. Another saw a similar freefall, dropping from 67.4% in 1995 to a low of 29.0% in 2018. You can dig into the full analysis of these long-term survey participation trends.

Wasted Resources and Brand Damage

Surveys with poor participation do not just generate bad data; they actively waste valuable resources. Think about the time, money, and effort that went into creating and sending that survey, all for insights you cannot trust.

There is also the risk of damaging your brand. Constantly bugging people with surveys they ignore can make your company seem out of touch, or just plain annoying. Over time, you can train your audience to tune out all of your communications.

A high response rate is a business necessity for gathering trustworthy insights and making smart, informed decisions.

Got Questions About Survey Response Rates?

We have covered a lot of ground, from designing surveys to getting them in front of the right people. But you probably still have a few questions. To wrap things up, here are some quick answers to the things we get asked most often.

What's a Good Survey Response Rate in 2024?

There is no magic number, but a healthy range for most digital surveys is between 15% and 30%. The truth is, "good" depends entirely on your channel and your audience.

In-app surveys can pull in a solid 20-30%, while a well-timed SMS survey might even hit 40-50%. A 50% rate is fantastic, but even a 10% rate from a cold email list could be a win. The key is setting realistic goals for your specific situation.

Does Survey Length Really Matter?

Yes. It matters more than almost anything else. People are busy, and a long survey feels like a chore they did not sign up for.

To get the most responses, aim to keep your survey under five minutes. Research shows that once a survey takes longer than 12 minutes to complete, you will see a major drop-off in completions.

Every question you add is another reason for someone to close the tab. Be ruthless and ask only what you need to know.

Should I Use Incentives to Boost My Rate?

Incentives like discounts, freebies, or small gift cards can definitely move the needle. Sometimes, a small reward can even double your response rate. But you have to be smart about it.

The danger is attracting people who just want the prize, not those who want to give you honest feedback. This can skew your results. The best approach is to match the incentive to the effort required and make sure it’s something your actual customers would value.

How Often Can I Survey My Customers?

This really depends on your business model and how frequently customers interact with you. Bombard them with surveys, and you’ll get "survey fatigue," a fast track to the ignore button.

- For B2B companies: A quarterly check-in is usually a good rhythm.

- For B2C companies: Tie your survey frequency to key interactions, but always give people at least a couple of months of breathing room between requests.
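A breathing-room rule like this is easy to enforce before each send. Here is a minimal Python sketch. The function name `can_survey` is hypothetical, and the 60-day default is an assumed reading of "a couple of months" that you should tune to your own cadence.

```python
from datetime import date, timedelta

MIN_GAP = timedelta(days=60)  # assumption: "a couple of months" is roughly 60 days


def can_survey(last_surveyed, today, min_gap=MIN_GAP):
    """Return True if enough time has passed since the customer's last survey request."""
    if last_surveyed is None:
        # Never surveyed before, so there is nothing to wait for.
        return True
    return today - last_surveyed >= min_gap


today = date(2024, 6, 1)
print(can_survey(None, today))               # True: never surveyed
print(can_survey(date(2024, 5, 1), today))   # False: only 31 days ago
print(can_survey(date(2024, 3, 1), today))   # True: 92 days ago
```

For a quarterly B2B check-in, the same helper could be called with `min_gap=timedelta(days=90)`.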

Why Are Telephone Survey Rates So Low These Days?

Telephone survey response rates have absolutely plummeted, thanks to technology and our collective hatred of spam calls. Pew Research Center’s phone polls, for example, saw response rates drop to just 6% in 2018, down from 9% just a few years earlier.

This is not surprising. The explosion of robocalls has trained us all to be wary of answering unknown numbers. You can learn more about the decline in telephone survey responses.
