Eliminate Biased Survey Questions for Accurate Data

Learn to identify and fix biased survey questions. Get actionable tips and clear examples to create neutral surveys for accurate, reliable data.

Ever spent weeks perfecting a survey, only to collect data that points you in the completely wrong direction? It’s a gut-wrenching feeling. Often, the problem is not your topic. It is the hidden biases in your questions, which quietly corrupt the results from the very beginning.

Biased survey questions are questions that unintentionally nudge a respondent toward a specific answer. This can happen through leading language, unbalanced answer options, or by framing a question in a way that makes one response seem more socially acceptable than another. The end result is inaccurate data you cannot trust.

How Hidden Biases Can Skew Your Survey Results

Even the most well-intentioned surveys can produce flawed data. The core issue is that human psychology is complex. The way we ask questions can trigger subconscious reactions that distort a person's true feelings or opinions.

The Real-World Impact of Survey Bias

Even with a massive sample size, bias can render your data completely useless.

One of the most famous examples of this is the 1936 Literary Digest presidential poll. The magazine collected responses from over two million people and confidently predicted a landslide victory for Alf Landon over Franklin D. Roosevelt. In reality, Roosevelt won by a huge margin.

So, what went wrong? The poll failed because its sample came from telephone directories and car registrations, which overrepresented wealthier Americans at the time. This is a classic case of selection bias. It is a powerful lesson that the quality of your sample matters far more than its size. You can find more fascinating stories about historical survey blunders at MeasuringU.com.
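The point about sample quality versus sample size can be illustrated with a small simulation. This is only a sketch with made-up numbers, not a reconstruction of the 1936 data: the 30% "wealthy" share and the support rates of 35% and 70% are assumptions chosen purely for illustration, and `simulate_polls` is a hypothetical helper name.

```python
import random

def simulate_polls(seed=0):
    """Compare a large biased sample with a small random one (illustrative numbers only)."""
    rng = random.Random(seed)

    # Hypothetical population: 30% "wealthy", 70% "other".
    # Assumed support for candidate A: 35% among wealthy, 70% among others.
    population = []
    for _ in range(200_000):
        wealthy = rng.random() < 0.30
        supports_a = rng.random() < (0.35 if wealthy else 0.70)
        population.append((wealthy, supports_a))

    true_share = sum(s for _, s in population) / len(population)

    # Large but biased sample: drawn only from the wealthy subgroup.
    wealthy_only = [p for p in population if p[0]]
    biased_sample = rng.sample(wealthy_only, 50_000)
    biased_share = sum(s for _, s in biased_sample) / len(biased_sample)

    # Small but random sample drawn from the whole population.
    random_sample = rng.sample(population, 1_000)
    random_share = sum(s for _, s in random_sample) / len(random_sample)

    return true_share, biased_share, random_share

true_share, biased_share, random_share = simulate_polls()
print(f"true support for A:        {true_share:.3f}")
print(f"biased sample (n=50,000):  {biased_share:.3f}")
print(f"random sample (n=1,000):   {random_share:.3f}")
```

Under these assumptions, the 50,000-person sample lands far from the true figure because everyone in it comes from the same subgroup, while the 1,000-person random sample stays close to it.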

Key Takeaway: Flawed survey data does not just give you a slightly off picture. It can lead you to make confident decisions based on completely wrong information, wasting time, budget, and resources.

Every survey creator needs to be aware of these psychological pitfalls. Recognizing them is the first real step to writing neutral questions that capture honest, actionable feedback. Without this awareness, you risk building your entire business strategy on a foundation of faulty assumptions.

Common Survey Biases at a Glance

Different types of bias can poison your data in unique ways. Once you learn to tell them apart, you’ll start spotting them everywhere, especially in your own survey drafts.

This quick-reference table can help you identify the most common culprits.

Common Survey Biases and Their Impact

| Bias Type | What It Is | Example Effect |
| --- | --- | --- |
| Selection Bias | When your survey sample is not representative of the target population. | A tech survey sent only to early adopters will show overly positive results for new features. |
| Response Bias | When respondents answer questions inaccurately or untruthfully. | A person might overstate their gym attendance to appear healthier and more active. |
| Leading Questions | Wording that subtly pushes the respondent toward a specific answer. | Asking "How amazing was our new feature?" discourages neutral or negative feedback. |
| Social Desirability | People answer questions in a way that will be viewed favorably by others. | Respondents might not admit to behaviors seen as negative, like wasting food or not recycling. |

Getting a handle on these biases is fundamental. By keeping them in mind during the design process, you can actively work to eliminate them and make sure the data you collect is a true reflection of your audience's views.

How Question Wording Creates Unintentional Bias

The words you choose for a survey are never just words. They are powerful tools. Get them right, and you will get honest, clear answers. Get them wrong, and you will end up with a confusing mess of biased responses.

It is surprising how tiny, innocent-looking changes in phrasing can completely change how someone reads a question and how they answer it.

This is where so many surveys stumble. You might think you are asking a straightforward question, but if it is packed with loaded terms or emotional language, you are not measuring opinion anymore. You are shaping it. The goal is to make your language so neutral it practically disappears, letting the respondent's genuine thoughts come through.

The Power of Leading and Loaded Questions

Leading questions are sneaky. They subtly nudge a respondent to a specific answer by building in an assumption or using language that hints at a "correct" choice. Loaded questions are their more aggressive cousin, using emotionally charged words to trigger a strong reaction.

Here is a classic example:

- The Problem: When a question includes a term like "award-winning," it sets a positive expectation right away. It makes it socially awkward for someone to say they had a bad experience, as it goes against the "excellent" image you have just painted.

- The Fix: Ask the same question without the flattering descriptor. That version is impartial. It strips away the positive framing, opening the door for a full range of answers, from ecstatic to deeply disappointed.

A fascinating political survey really drives this point home. When a question about allowing a controversial group to hold a rally was framed with “Given the risk of violence,” only 45% of people agreed. But when the question was rephrased to start with “Given the importance of free speech,” support shot up to 85%.

That is a 40-point swing from a simple change in wording. It is a stark reminder of how much words matter. You can read more about these research findings to see just how deeply language can sway public opinion. For an even deeper look into writing better questions, take a look at our guide on how to write survey questions.

Ambiguity: The Silent Data Killer

Another trap that is easy to fall into is using ambiguous or vague language. If your respondent has to stop and guess what you really mean, their answer is practically useless. Words like "often," "regularly," or "sometimes" mean completely different things to different people.

Think about it. Asking "Do you use our product regularly?" is a data nightmare. For one person, "regularly" could mean every single day. For someone else, it might mean once a month. The data you get back will be inconsistent and impossible to analyze with any accuracy.

The solution is to be incredibly specific.

- Biased Question: "Do you use our product regularly?"

- Neutral Question: "In a typical week, how many days do you use our product?"

The improved version swaps a subjective term ("regularly") for a concrete, measurable one (the number of days in a typical week). This forces a specific answer and means every response is measured against the same clear standard. By being precise and neutral, you eliminate the guesswork and get much closer to the truth.

It is not just the words you choose. The very structure of your questions can sneak significant bias into your survey results. Some question formats look perfectly fine on the surface, but they are actually designed in a way that can confuse respondents or corner them into giving an answer that is not quite right.

Getting good at spotting these structural traps is just as important as picking neutral language.

Three of the most common offenders I see are double-barreled questions, questions with absolutes, and assumption-based questions. Each one messes with your data in its own unique way by making it tough for someone to answer honestly. The good news is that once you know what to look for, they are surprisingly easy to take apart and fix.

Common Structural Flaws

A double-barreled question is a classic survey mistake where you try to ask two things at once. The person taking the survey might agree with one part but disagree with the other, which leaves them stuck. Think of a question like, "Was our website design attractive and easy to navigate?" What if it was beautiful but a total nightmare to use? There is no way to answer that accurately.

Then you have questions with absolutes. These use all-or-nothing words like "always," "every," or "never." These words back respondents into a corner because real life is rarely that black and white. Very few people can honestly say they "always" do something, so these questions often just get you a lot of inaccurate 'no' answers.

Finally, there are assumption-based questions. These incorrectly assume something about the respondent's experience or opinion. A question like, "What do you find most frustrating about our mobile app?" takes for granted that the user has a frustration. If they love the app and have no complaints, they have no way to answer.

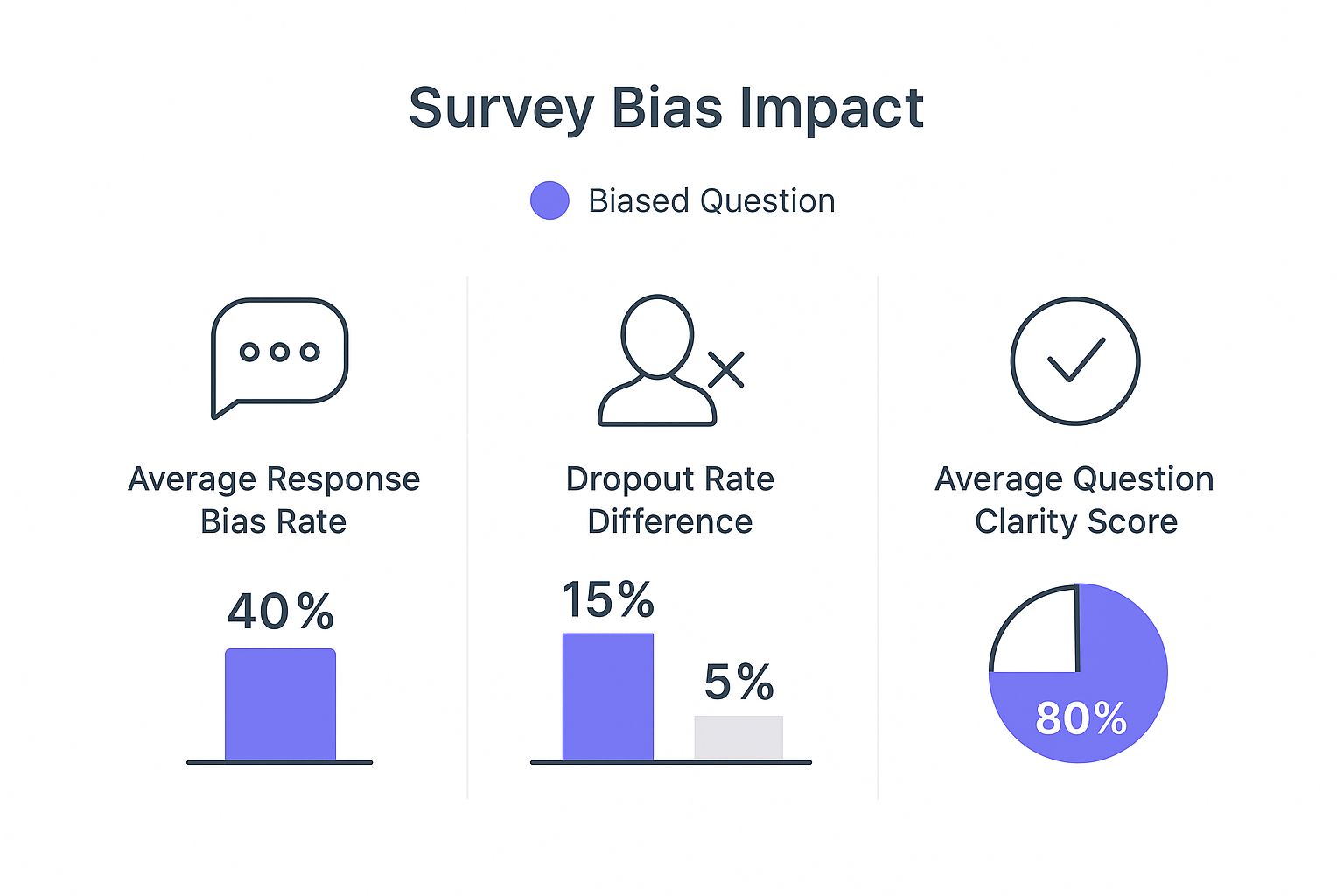

These flawed structures do real damage to your data quality. This infographic shows just how much biased questions can impact everything from response accuracy to survey completion rates.

As you can see, simply shifting from biased to neutral questions can significantly cut down on how many people abandon your survey and will dramatically improve the clarity of the feedback you receive.

Rewriting Biased Questions for Clarity and Neutrality

Fixing these issues is usually a matter of breaking down a complicated question into simpler, more direct ones. The goal is to ask one clean question at a time. For more on this, our guide on the closed-ended question has some great tips for creating straightforward options.

The best practice is to always ask one clear, specific question at a time. This guarantees that the answer you receive is a direct response to a single idea, not a muddled reaction to multiple concepts at once.

To see this in action, the table below shows how you can transform these common biased questions into clear, neutral alternatives that will give you much more reliable data.

Rewriting Biased Questions for Clarity and Neutrality

| Biased Question Type | Problematic Example | Improved Neutral Version |
| --- | --- | --- |
| Double-Barreled | How satisfied are you with the speed and reliability of our service? | Please rate your satisfaction with the speed of our service. (Followed by a separate question for reliability) |
| Absolutes | Do you always check your email before starting your workday? | In a typical week, how often do you check your email before starting your workday? |
| Assumption-Based | Which new features would you like to see in our next software update? | Are there any new features you would like to see in our next software update? |

By breaking down questions and checking that each one is focused, fair, and free of assumptions, you pave the way for data you can actually trust. It is a small change in process that makes a huge difference in the outcome.
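Some of these checks can be roughed out in code as a first pass over a draft. The sketch below is a deliberately crude heuristic, not a real bias detector: `flag_question` is a hypothetical helper, and the word lists are assumptions you would need to tune for your own surveys. It will produce false positives (not every "and" signals a double-barreled question), and it cannot catch assumption-based questions at all, so treat its output as a prompt for human review.

```python
import re

# Illustrative word lists (assumptions, not an exhaustive vocabulary).
ABSOLUTES = {"always", "never", "every", "all", "none"}
LOADED_TERMS = {"amazing", "award-winning", "excellent", "terrible"}

def flag_question(question: str) -> list[str]:
    """Return a list of possible wording problems found in a survey question."""
    # Lowercase word tokens; hyphenated terms such as "award-winning" stay whole.
    words = set(re.findall(r"[a-z]+(?:-[a-z]+)*", question.lower()))
    flags = []
    if "and" in words:
        flags.append("possible double-barreled question")
    if ABSOLUTES & words:
        flags.append("absolute wording")
    if LOADED_TERMS & words:
        flags.append("loaded or leading term")
    return flags

drafts = [
    "How satisfied are you with the speed and reliability of our service?",
    "Do you always check your email before starting your workday?",
    "How amazing was our new feature?",
    "In a typical week, how many days do you use our product?",
]
for draft in drafts:
    print(flag_question(draft) or "no flags", "<-", draft)
```

A keyword scan like this is cheap to run on every draft, which is its main appeal. It does not replace reading each question aloud and asking whether a respondent could honestly answer it.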

Addressing Cultural and Social Desirability Biases

Beyond just the words you choose, some of the trickiest survey biases are tangled up in basic human nature. We are all wired to want to fit in, and that gives rise to social desirability bias. It is that little voice in our head that nudges us to give answers that make us look good, rather than what is actually true.

Think about it. When you ask someone about their charitable donations or how often they eat healthy, people tend to overstate the good stuff. It is not always a conscious lie. It is a subconscious pull to align with what we think society expects. This creates a dangerous gap between what people say and what they do, which can send your data way off course.

Then there is acquiescence bias, which is basically the tendency to be a "yea-sayer." This is that natural inclination to agree with statements, especially if the respondent is unsure or just wants to be helpful and get through the survey. If your survey is packed with "agree/disagree" questions, you are practically rolling out the red carpet for this bias.

Navigating Cultural Differences in Surveys

These biases get even more complicated when you are running surveys across different cultures. What is considered a socially acceptable answer in one country might be a total miss in another. Even the way people respond to scales can vary dramatically.

And this is not just a minor detail. Cross-cultural research backs it up. A 2016 study, for instance, found that respondents in Latin America were more likely to show acquiescence bias and pick extreme answers on a scale. Meanwhile, respondents in Asia often leaned toward the midpoint. The same research pointed to higher rates of socially desirable answers in countries like Italy, Singapore, China, and the U.S. You can look deeper into these cultural tendencies in market research to see just how much geography can shape data.

Key Insight: Ignoring cultural context is a recipe for flawed data. A survey that works perfectly in one country might fail spectacularly in another simply because of different communication norms and social values.

Strategies for More Honest Feedback

To get past these all-too-human tendencies, you need to be a bit more clever with your question design. The goal is to create a space where people feel comfortable and safe enough to be completely honest.

Frame Sensitive Questions Carefully

When you are asking about sensitive topics like income, personal habits, or controversial opinions, the setup is everything.

- Normalize the Behavior: Start by making the behavior sound common and understandable. Instead of asking, "Do you ever miss a credit card payment?" try a softer approach. "Many people find it difficult to make every credit card payment on time. Has this ever happened to you in the last year?" This simple rephrasing lowers the shame barrier and invites a more truthful answer.

Use Indirect Questioning Techniques

Sometimes, the best way to get a direct answer is to ask an indirect question. Instead of asking someone about their own behavior, ask them what they think other people do.

- Projection: Try a question like, "What are some reasons you think people might not recycle regularly?" This technique allows respondents to project their own feelings and reasons onto a hypothetical "other," making it less personal and much easier to answer honestly.

Balance Your Question Scales

If you are using scales, make sure your options are balanced and do not subtly nudge respondents in one direction. An unbalanced scale is a biased scale. A balanced five-point scale looks like this:

- Excellent

- Good

- Average

- Needs improvement

- Poor

This kind of balanced structure gives equal weight to both positive and negative options, removing any subtle pressure to choose a "good" answer. By being mindful of these human and cultural factors, you can build surveys that capture what people really think, not just what they think you want to hear.

Practical Steps for Neutral Survey Design

Crafting a genuinely neutral survey is about more than just picking the right words. It is about designing the entire experience to sidestep bias from the moment a person starts to the moment they hit "submit." A solid approach means looking at the big picture, from how you sequence your questions to the answer choices you offer.

One of the most valuable, and most frequently skipped, steps is the pilot test. Before you launch your survey to everyone, run it by a small, representative group first. Pay close attention to where people pause, get confused, or give feedback you did not see coming. This trial run is your best shot at catching awkward phrasing or hidden biases before they mess up your real data.

Smart Question Ordering and Answer Scales

The order of your questions can quietly nudge people's answers, a sneaky effect known as priming. For instance, if you ask a bunch of questions about product frustrations right before a general satisfaction question, you are likely to get a more negative score than you otherwise would have.

To sidestep this, think about the flow:

- Start Broad, Then Get Specific: Ease respondents in with general, easy questions. Once they are warmed up, you can move into more detailed or sensitive topics.

- Group Similar Topics: Keep questions about the same subject together. This creates a logical flow and prevents the mental whiplash of jumping between unrelated ideas.

- Randomize Where Possible: If you have long lists, like features to rate, randomizing the order for each person helps prevent order bias, where items at the top of a list naturally get more attention.
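If you are assembling a survey yourself rather than relying on a platform's built-in option, per-respondent randomization can be sketched as follows. The function name `randomized_order` and the `pinned_last` parameter are hypothetical. Seeding the generator with the respondent ID is one possible design choice: it means the same person sees the same order if they reload the page.

```python
import random

def randomized_order(items, respondent_id, pinned_last=()):
    """Shuffle items for one respondent, keeping opt-out options at the end."""
    # Seeding with the respondent ID makes each person's order reproducible.
    rng = random.Random(respondent_id)
    movable = [item for item in items if item not in pinned_last]
    rng.shuffle(movable)
    # Options such as "Don't Know" stay last so they remain easy to find.
    return movable + [item for item in items if item in pinned_last]

features = ["Dashboards", "Exports", "Integrations", "Alerts", "Don't Know"]
for respondent in ("resp-001", "resp-002"):
    print(respondent, randomized_order(features, respondent, pinned_last=("Don't Know",)))
```

Only unordered lists should be shuffled this way. Rating scales need to keep their natural order, or respondents will misread them.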

A key part of neutral design is giving respondents a way out. Forcing someone to choose an answer that does not fit their situation introduces noise into your data. Always include options like "Not Applicable" or "Don't Know" when appropriate.

This simple move respects the respondent's reality and makes your data cleaner. For more ideas on getting quality responses, you can find helpful tips to improve survey response rates in our guide.

Build a Methodologically Sound Survey

Building a survey that gives you clean, reliable data means thinking about the entire user journey. To put effective strategies into practice, you can use specialized tools for creating and sending out your questions. For example, embedding surveys from platforms like Typeform often comes with built-in features that guide you toward a more logical question structure.

The answer scales you use are just as important as your questions. An unbalanced scale can completely skew your results. Think about a scale with four positive options and only one negative one ("Excellent," "Very Good," "Good," "Fair," "Poor"). It is basically pushing people toward a positive answer.

The goal is to always use symmetrical and balanced scales. Make sure you have an equal number of positive and negative options on either side of a true neutral midpoint. This structure allows for a full spectrum of opinion without nudging anyone in a particular direction. When you combine smart ordering, balanced scales, and thorough testing, you end up with a survey that is built to capture honest, reliable insights.
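The symmetry rule can be expressed as a quick check. In this sketch you label each scale option yourself as positive (+1), neutral (0), or negative (-1). Those labels are a judgment call, and `is_balanced` is a hypothetical helper, not a feature of any survey tool. A scale passes only if it mirrors itself around the middle.

```python
def is_balanced(scale):
    """Check that a scale is symmetric around its midpoint.

    scale: list of (label, polarity) pairs in display order,
           where polarity is +1 (positive), 0 (neutral), or -1 (negative).
    """
    polarities = [polarity for _, polarity in scale]
    # A balanced scale reads the same from either end once the signs are flipped.
    return polarities == [-p for p in reversed(polarities)]

# The skewed scale described above, with "Fair" counted as positive as in the text.
skewed = [("Excellent", 1), ("Very Good", 1), ("Good", 1), ("Fair", 1), ("Poor", -1)]

# A five-point scale with two positive options, a neutral midpoint, and two negative options.
balanced = [("Excellent", 1), ("Good", 1), ("Average", 0),
            ("Needs improvement", -1), ("Poor", -1)]

print(is_balanced(skewed))    # False
print(is_balanced(balanced))  # True
```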

Even after you get the hang of writing better surveys, a few tricky questions always seem to pop up. Let’s tackle some of the most common ones I hear from people trying to get their surveys right.

How Can I Spot Bias in Survey Data I Already Have?

Looking for bias in data you have already collected can feel like detective work, but it is definitely doable. The first place to look is the source: the original survey questions and who you sent them to.

Were the questions leading or loaded with emotional language? Was your sample a good mix of your target audience, or did it accidentally lean heavily toward one specific group? Answering these questions gives you your first set of clues.

Next, you have to dig into the response patterns themselves. Look for red flags like:

- Overwhelmingly positive answers. Did almost everyone pick the most glowing option? That could be a sign of social desirability bias or a poorly phrased leading question.

- A rush to the first option. Seeing a lot of people choose the very first answer in a multiple-choice list? That might be satisficing, where respondents just pick the easiest, quickest option to get it over with.

- A sudden drop-off. If a lot of people bailed on a specific question, it is a big hint that it might have been confusing, too sensitive, or just plain poorly written.

Finding these signs helps you see which parts of your data might be shaky. You cannot magically fix biased data after the fact, but knowing where the weak spots are means you can treat those "insights" with the healthy dose of skepticism they deserve.
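The three red flags above can be turned into simple numbers for each question. This is a rough sketch: `response_red_flags` is a hypothetical function, and the sample data is invented. What counts as "too high" is left to you, since there is no universal threshold.

```python
from collections import Counter

def response_red_flags(responses, displayed_options, most_positive):
    """Summarize three warning signs for a single multiple-choice question.

    responses: one entry per respondent who reached the question;
               None means they skipped it or abandoned the survey there.
    displayed_options: answer options in the order they were shown.
    most_positive: the most favorable option on the scale.
    """
    total = len(responses)
    answered = [r for r in responses if r is not None]
    if total == 0 or not answered:
        raise ValueError("need at least one answered response")
    counts = Counter(answered)
    return {
        # Share of answers that picked the most glowing option.
        "most_positive_share": counts[most_positive] / len(answered),
        # Share of answers that picked whatever was listed first.
        "first_option_share": counts[displayed_options[0]] / len(answered),
        # Share of respondents who reached the question but did not answer it.
        "skip_rate": (total - len(answered)) / total,
    }

# Invented data: eight "Excellent", one "Good", one respondent who skipped.
sample = ["Excellent"] * 8 + ["Good"] + [None]
print(response_red_flags(sample, ["Poor", "Good", "Excellent"], "Excellent"))
```

Comparing these figures across questions is usually more informative than looking at one in isolation. A question whose skip rate is several times higher than its neighbors is the one to reread first.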

What Are the Ethical Implications of Using Biased Questions?

This one is a big deal. Using biased questions, even by accident, has serious ethical consequences. At its core, it is a form of manipulation. You are not actually gathering honest opinions; you are steering people to the answers you want, which can lead to terrible business decisions that ultimately hurt your customers.

The Ethical Bottom Line: When you make decisions based on biased data, you are building your strategy on a foundation of falsehoods. This is how companies end up launching products nobody needs, rolling out marketing campaigns that fall flat, or completely missing the real problems their customers are facing.

Ethically, a survey is a promise. It is a promise to give your audience a voice. Biased questions break that promise by silencing their real thoughts and replacing them with a distorted echo of your own assumptions. It erodes the trust between you and your respondents, who think they are giving you helpful feedback when their true perspectives are being ignored.

Respecting your audience means giving them a fair, neutral platform to share what they genuinely think. Anything less is not just bad research; it is bad faith.

What Tools Can Help Me Create Neutral Surveys?

While nothing beats thoughtful, careful question design, some great tools can act as a safety net. Many modern survey platforms are built with features specifically designed to help you sidestep common biases.

When you are choosing a tool, look for software that offers:

- Question randomization to help neutralize order bias.

- Logic branching so you are only asking people relevant follow-up questions.

- Vetted templates that are already designed for neutrality.

- A/B testing features to see how small changes in wording can affect results.

Some of the more advanced platforms, like Surva.ai, even use AI to scan your questions and flag potentially leading or ambiguous language before you hit send. Think of these tools as a second pair of eyes, helping you catch the subtle mistakes that are all too easy to miss on your own.
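If your platform supports A/B testing of question wording but does not report whether a difference is meaningful, a two-proportion z-test is one common way to check. The sketch below uses only the Python standard library. The counts are invented for illustration, and `two_proportion_z_test` is a hypothetical helper name.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(agree_a, n_a, agree_b, n_b):
    """Two-sided z-test for a difference in agreement rates between two wordings."""
    p_a, p_b = agree_a / n_a, agree_b / n_b
    # Pooled rate under the null hypothesis that wording makes no difference.
    pooled = (agree_a + agree_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("test is undefined when everyone or no one agrees")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Invented counts: wording A got 120 of 300 agreeing, wording B got 150 of 300.
z, p_value = two_proportion_z_test(120, 300, 150, 300)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the two wordings really do pull answers in different directions. It does not tell you which wording is the neutral one, so that judgment still comes from reviewing the questions themselves.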
