Using Closed-Ended Questions for Clearer Survey Data

Learn how to use closed-ended questions to get clear, actionable data. Our guide offers practical tips for better survey design and analysis.

A closed-ended question is direct: it gives people a fixed list of answers to choose from. Think "Yes" or "No," or a classic multiple-choice selection. It is like ordering from a set menu at a restaurant; you pick from the specific options provided.

This approach simplifies the experience for the person taking your survey and gives you structured, measurable data that is easy to analyze.

The Foundation of Structured Survey Data

A closed-ended question is about efficiency and clarity. By limiting the possible responses, you guide the user toward a specific type of answer. This is the key to collecting clean data because it gets rid of the ambiguity that comes with open-ended, free-form text.

Instead of trying to make sense of dozens of unique, subjective answers, you get organized data points ready for instant analysis.

This direct approach works well in digital surveys. Closed-ended questions are the backbone of quantitative research, particularly for mobile and online surveys where getting people to the finish line quickly is important. Their design, often using simple radio buttons or drop-down menus, typically leads to higher response rates and much faster data collection. You can learn more about how question structure affects survey performance in this great breakdown of data collection efficiency from Pollfish.com.

How It Works in Practice

Let's say you want to gauge user satisfaction with a new feature in your SaaS product. Asking something vague like, "What do you think of the new dashboard?" can produce messy, hard-to-quantify feedback.

Instead, a closed-ended question brings structure: "On a scale of 1 to 5, how satisfied are you with the new dashboard?"

This image shows a perfect example of how a closed-ended question presents clear, predefined choices, making it easy for anyone to respond.

This format makes it simple for a user to give feedback and allows you to categorize that feedback instantly. No guesswork is required.

The structured nature of these questions is what makes them so powerful for tracking metrics over time. For example, you can easily compare satisfaction scores month-over-month to see if your product updates are hitting the mark. This is how you turn a jumble of opinions into a source of measurable, actionable business intelligence.
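As a rough illustration of month-over-month tracking, here is a minimal Python sketch. The response data, the `YYYY-MM` month labels, and the function name `monthly_averages` are all made up for this example; a real survey export will look different.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical responses: (month, satisfaction score on a 1-5 scale).
responses = [
    ("2024-01", 3), ("2024-01", 4), ("2024-01", 2),
    ("2024-02", 4), ("2024-02", 4), ("2024-02", 5),
]

def monthly_averages(rows):
    """Return {month: average score}, with months in sorted order."""
    by_month = defaultdict(list)
    for month, score in rows:
        by_month[month].append(score)
    return {month: mean(scores) for month, scores in sorted(by_month.items())}

averages = monthly_averages(responses)

# Print the change between each pair of consecutive months.
months = list(averages)
for prev, curr in zip(months, months[1:]):
    change = averages[curr] - averages[prev]
    print(f"{prev} -> {curr}: {averages[prev]:.2f} to {averages[curr]:.2f} ({change:+.2f})")
```

Because every answer is a number on the same scale, the comparison needs no interpretation step at all.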

Choosing Between Closed and Open Ended Questions

Figuring out whether to use a closed-ended or an open-ended question comes down to what you're trying to learn. It helps to think of it like this: a closed-ended question is like running a quick poll. It gives you the "what"—the hard numbers and percentages perfect for statistical analysis. On the other hand, an open-ended question is more like conducting an interview; it helps you uncover the "why" behind those numbers.

Closed-ended questions are designed for efficiency and scale. They generate quantitative data: because every answer falls into a predefined category or scale point, responses can be counted, sorted, and compared with very little effort. This makes them ideal for tracking metrics over time or comparing how different groups of users respond. For example, you can quickly see if your Net Promoter Score has ticked up since your last big product release.
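To make the Net Promoter Score comparison concrete, here is a small sketch using the standard NPS definition: on a 0 to 10 scale, the percentage of promoters (9 or 10) minus the percentage of detractors (0 to 6). The two score lists and the function name are invented for illustration.

```python
def net_promoter_score(scores):
    """NPS = % promoters (9-10) minus % detractors (0-6), on a 0-10 scale."""
    if not scores:
        raise ValueError("need at least one response")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)

# Hypothetical answers to "How likely are you to recommend us?" (0-10).
before_release = [10, 9, 8, 7, 6, 5, 9, 3, 10, 8]
after_release = [10, 9, 9, 8, 7, 6, 9, 10, 10, 8]

nps_before = net_promoter_score(before_release)
nps_after = net_promoter_score(after_release)
print(f"NPS before: {nps_before:+.0f}, after: {nps_after:+.0f}")
```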

In contrast, open-ended questions deliver qualitative data. These answers are rich, descriptive, and full of context. While this kind of information is gold for exploring new ideas or getting a feel for customer sentiment, it takes more time and thought to analyze. You can't just pop descriptive sentences into a spreadsheet and get a neat chart.

The Right Question for the Right Goal

Your research goal should always be your guide. If you need clean, comparable data to measure specific outcomes, closed-ended questions are your best bet. But if you're just starting to explore a problem and you don't even know what all the possible answers might be, open-ended questions will give you the detailed, unfiltered feedback you need.

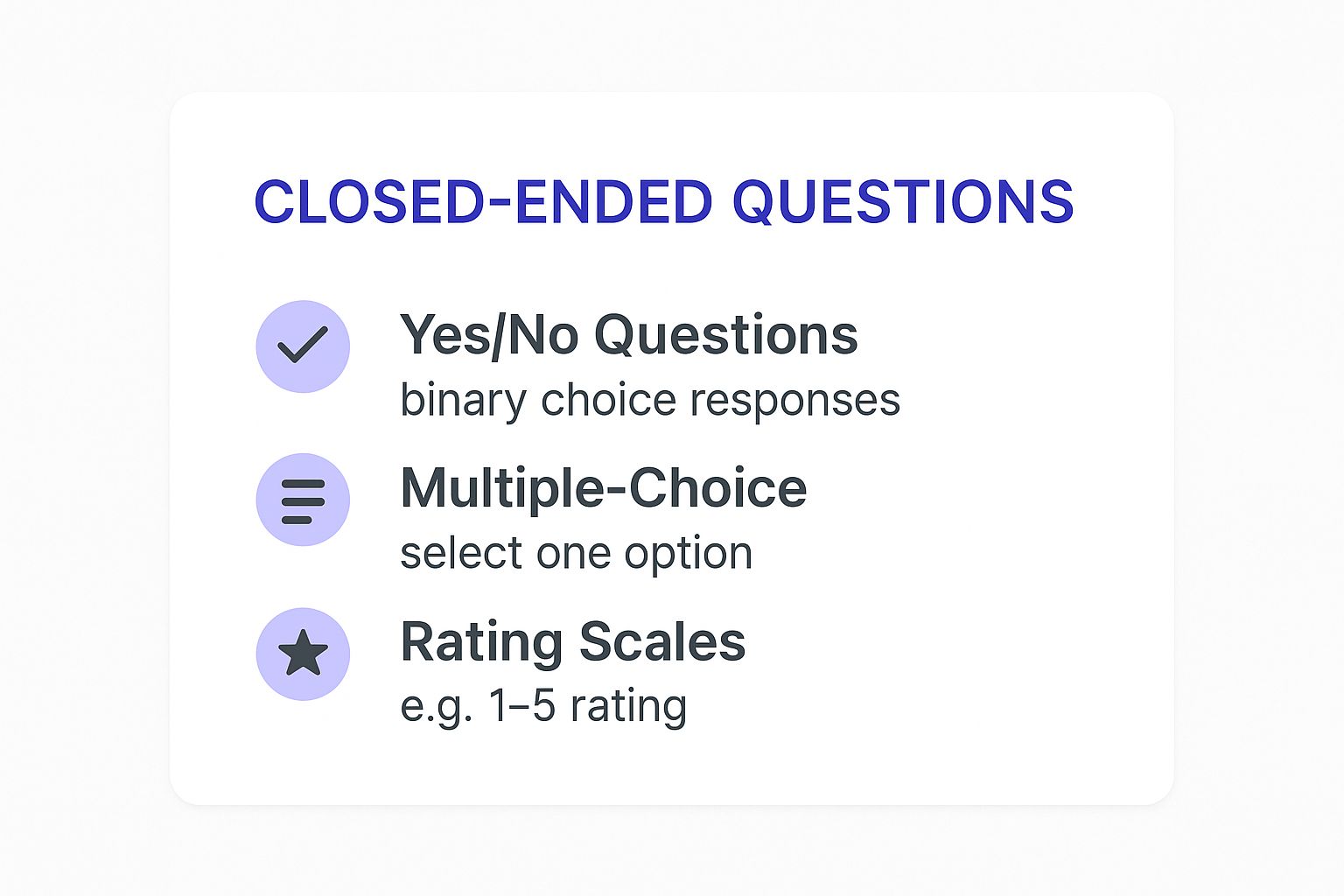

This infographic breaks down some of the most common formats for closed-ended questions.

These structured formats, like yes/no, multiple-choice, and rating scales, are built to capture specific information without asking too much of the person answering.

It's also interesting to note how a question's structure can subtly influence the answers you get. Research shows that the preset answers in a closed-ended question can nudge people's thinking in certain directions. Respondents might be more inclined to pick an answer that feels socially acceptable, which can sometimes mask their true, more nuanced opinions. You can learn more about how question formats shape answers in this 2023 study on respondent behavior.

To make the choice even clearer, let's put the two question types side-by-side.

Closed vs Open Ended Questions at a Glance

This table breaks down the fundamental differences between closed and open ended questions to help you choose the right format for your goals.

| Attribute | Closed-Ended Question | Open-Ended Question |
| --- | --- | --- |
| Data Type | Quantitative (numerical) | Qualitative (descriptive) |
| Best For | Measuring and tracking metrics | Exploring new ideas and context |
| Analysis Effort | Low and often automated | High and requires interpretation |
| Respondent Effort | Low, quick to answer | High, requires more thought |

Ultimately, both question types have their place. The real skill is knowing when to use each one to get the insights you need.

How Closed-Ended Questions Benefit SaaS Surveys

In the fast-moving software industry, getting quick and clear feedback is a top priority. A closed-ended question is a powerful tool here because it respects your user's time. Busy professionals are far more likely to complete a survey they can finish with a few quick clicks, no matter what device they're on.

This speed and simplicity have a direct impact on survey completion rates. Higher completion means more data, which gives you a much more accurate picture of your user base. For more strategies on getting users to hit 'submit', check out our guide on how to improve your survey response rate: https://www.surva.ai/blog/improve-survey-response-rate.

Get Clearer Data for Smarter Decisions

The structured nature of closed-ended questions gives you clean, quantitative data. This makes tracking key SaaS metrics over time incredibly straightforward. You can consistently measure things like Customer Satisfaction (CSAT) and Net Promoter Score (NPS) to see exactly how product changes are landing with your users.

The real power comes from the ability to compare apples to apples. Because the answers are standardized, you can reliably track performance and spot trends without having to interpret subjective feedback.

This consistency is what opens the door to powerful data segmentation. You can effortlessly compare feedback from different user groups, such as new sign-ups versus long-term power users. You might discover that a new feature is a huge hit with trial users but is creating friction for your enterprise clients.
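Here is one way such a segment comparison might look in Python. It assumes a common CSAT convention, the share of respondents who answer 4 or 5 on a 1 to 5 scale. The segment names, ratings, and function name are hypothetical.

```python
from collections import defaultdict

# Hypothetical (segment, rating on a 1-5 scale) pairs for one feature.
ratings = [
    ("trial", 5), ("trial", 4), ("trial", 4), ("trial", 2),
    ("enterprise", 2), ("enterprise", 3), ("enterprise", 4), ("enterprise", 1),
]

def satisfied_share_by_segment(rows, threshold=4):
    """Return {segment: percent of ratings at or above the threshold}."""
    totals = defaultdict(int)
    satisfied = defaultdict(int)
    for segment, rating in rows:
        totals[segment] += 1
        if rating >= threshold:
            satisfied[segment] += 1
    return {seg: 100 * satisfied[seg] / totals[seg] for seg in totals}

shares = satisfied_share_by_segment(ratings)
for segment, share in shares.items():
    print(f"{segment}: {share:.0f}% satisfied")
```

With this made-up data, the feature looks popular with trial users and much less so with enterprise accounts, which is the kind of gap an overall average would hide.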

Streamline Your Feedback Analysis

Once you have all this structured data, making sense of it becomes so much simpler. You can quickly turn survey results into charts and graphs that are easy for your entire team to examine. This takes the guesswork out of product development and helps you make decisions backed by solid evidence.

After gathering responses, using effective customer feedback analysis tools is key to pulling actionable insights from your data. With a clear view of what different customer segments are thinking, you can prioritize your product roadmap with confidence. You'll know exactly which features to build, which bugs to squash, and what improvements will deliver the biggest impact on your business.

Types of Closed-Ended Questions (With Examples)

Once you get a feel for the different kinds of closed-ended questions, you can start building surveys that pull in the exact data you need for any situation. Each format has its own job to do, helping you design surveys that are easy for users to answer and even easier for you to analyze.

Let's break down some of the most common types you’ll be using in your SaaS surveys.

Dichotomous Questions

This is the simplest form of a closed-ended question. It’s a direct, two-option choice, usually a simple "Yes" or "No." This format is perfect when you need a clear, definitive answer with no room for maybes.

- Example for SaaS: "Did you find our new knowledge base helpful?"

A question like this gives you an instant binary metric. You can immediately see the percentage of users who found the resource useful, giving you a straightforward data point to track over time.

Multiple-Choice Questions

We’ve all seen these. Multiple-choice questions give respondents a list of options and ask them to pick one (or sometimes more). This format is your go-to when you have a good hunch about the likely answers but want to see which one comes out on top.

One key to a successful multiple-choice question is to make the options mutually exclusive. This prevents confusion and keeps your data clean and easy to interpret.

For instance, you could ask users which of the following features they rely on most:

- Dashboard Analytics

- Report Generation

- Team Collaboration Tools

- Automated Workflows

This helps you get a clear picture of feature adoption and figure out which parts of your product are the most valuable to your users. If you want to learn more, we've put together a complete list of the different types of survey questions you can use.
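Tallying multiple-choice answers takes only a few lines. The sketch below reuses the four options from the example above; the list of answers and the `tally` helper are invented for illustration.

```python
from collections import Counter

OPTIONS = [
    "Dashboard Analytics",
    "Report Generation",
    "Team Collaboration Tools",
    "Automated Workflows",
]

# Hypothetical single-choice answers, one per respondent.
answers = [
    "Dashboard Analytics", "Automated Workflows", "Dashboard Analytics",
    "Report Generation", "Dashboard Analytics", "Team Collaboration Tools",
    "Automated Workflows", "Dashboard Analytics",
]

def tally(answers, options):
    """Return (option, count, percent) tuples, most popular option first."""
    unknown = set(answers) - set(options)
    if unknown:
        raise ValueError(f"answers outside the option list: {sorted(unknown)}")
    counts = Counter(answers)
    total = len(answers)
    ranked = sorted(options, key=lambda opt: -counts[opt])  # stable for ties
    return [(opt, counts[opt], 100 * counts[opt] / total) for opt in ranked]

results = tally(answers, OPTIONS)
for option, count, percent in results:
    print(f"{option}: {count} ({percent:.1f}%)")
```

The check for unknown answers is a cheap guard: with a fixed option list, any value outside it points to a data-entry or export problem.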

Rating Scale Questions

Rating scales are all about measuring how strongly someone feels about something. You're asking users to place their opinion on a numerical scale, like 1 to 5 or 1 to 10. You’ll often see these as Likert scales or star ratings.

These questions are incredibly useful for gauging customer satisfaction or how easy a feature is to use.

- Example for SaaS (Likert Scale): "On a scale of 1 (Very Difficult) to 5 (Very Easy), how easy was our onboarding process?"

Ranking Questions

Finally, we have ranking questions. These ask people to put a list of items in order of preference or importance. This is a powerful way to understand user priorities, especially when you have to make tough calls about your product roadmap.

For example, you could ask users to rank the following potential features from most to least important:

- Dark Mode

- Mobile App

- Advanced API Access

- Third-Party Integrations

This type of question moves beyond a simple choice. It gives you a clear hierarchy of what your customers want, helping you focus your efforts where they'll have the biggest impact.
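There are several ways to combine individual rankings into one hierarchy. The sketch below uses a Borda count, which is one common option and not necessarily what any given survey tool does: with four items, a first-place vote earns 3 points, second place 2, and so on down to 0. The three sample rankings are made up.

```python
from collections import defaultdict

# Hypothetical rankings, each listed from most to least important.
rankings = [
    ["Mobile App", "Third-Party Integrations", "Dark Mode", "Advanced API Access"],
    ["Third-Party Integrations", "Mobile App", "Advanced API Access", "Dark Mode"],
    ["Mobile App", "Dark Mode", "Third-Party Integrations", "Advanced API Access"],
]

def borda_scores(rankings):
    """Return (item, points) pairs sorted from highest to lowest score."""
    scores = defaultdict(int)
    for ranking in rankings:
        n = len(ranking)
        for position, item in enumerate(ranking):
            scores[item] += n - 1 - position  # top spot earns n - 1 points
    return sorted(scores.items(), key=lambda pair: pair[1], reverse=True)

priorities = borda_scores(rankings)
for item, points in priorities:
    print(f"{item}: {points} points")
```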

How to Write Effective Closed-Ended Questions

Crafting a good closed-ended question is a real skill. You are not just asking something; you are trying to collect clean, unbiased data you can actually trust. If you rush the process, you can easily end up with skewed results that point your product strategy in the wrong direction.

One of the most important rules is to make your answer options mutually exclusive. This just means a respondent can only pick one answer because the choices don't overlap. If someone can fit into multiple categories, your data becomes a mess.

Just as important, your answers need to be collectively exhaustive. This principle means you have covered all the possible answers, so no one is forced to select a response that doesn't fit. Often, this is as simple as adding an "Other (please specify)" or "I don't know" option.
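For numeric answer brackets (team size, age, monthly spend), both rules can be checked mechanically. The sketch below is a hypothetical helper that assumes inclusive integer ranges and an explicit minimum and maximum you want covered. It reports overlaps, which break mutual exclusivity, and gaps, which break exhaustiveness.

```python
def check_brackets(brackets, minimum, maximum):
    """Find overlaps and gaps in inclusive integer ranges covering minimum..maximum."""
    overlaps, gaps = [], []
    expected_start = minimum
    for low, high in sorted(brackets):
        if low < expected_start:
            # This bracket starts inside a range that is already covered.
            overlaps.append((low, min(high, expected_start - 1)))
        elif low > expected_start:
            # Nothing covers the values between the previous bracket and this one.
            gaps.append((expected_start, low - 1))
        expected_start = max(expected_start, high + 1)
    if expected_start <= maximum:
        gaps.append((expected_start, maximum))
    return overlaps, gaps

# Hypothetical "How many people are on your team?" options.
flawed = [(1, 10), (10, 50), (51, 100)]  # 10 appears twice, 101+ is missing
fixed = [(1, 10), (11, 50), (51, 100), (101, 500)]

flawed_report = check_brackets(flawed, minimum=1, maximum=500)
fixed_report = check_brackets(fixed, minimum=1, maximum=500)
print("flawed:", flawed_report)
print("fixed: ", fixed_report)
```

For non-numeric options there is no such shortcut, which is where an "Other (please specify)" choice earns its place.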

Key Principles for Quality Questions

Beyond structuring the answers, the words you use have a massive impact on the quality of feedback you get. Clear and simple phrasing always wins. You should avoid industry jargon or complex terms that might confuse people or make them second-guess their answers.

Leading questions are another classic mistake. A question like, "How much do you love our amazing new feature?" subtly pushes people toward a positive response. A much better, more neutral way to phrase it would be, "How would you rate your experience with our new feature?"

A well-written closed-ended question feels neutral and objective. It lets the respondent answer honestly without feeling guided toward a specific choice, which is the secret to collecting data you can rely on.

Avoiding Common Mistakes

To get the most value from your surveys, you have to write them well. We've put together a detailed guide on how to write survey questions (https://www.surva.ai/blog/how-to-write-survey-questions) that’s packed with more tips and examples. It's also worth thinking about the overall survey experience from start to finish.

Here are a few more tips to keep in mind:

- Keep It Simple: Use straightforward language. If a question is hard to understand, you're going to get unreliable answers. Simple as that.

- One Idea Per Question: Avoid asking two things at once (e.g., "Was our support fast and helpful?"). Someone might have found it fast but unhelpful, or vice versa. Split them up.

- Order Questions Logically: Kick things off with broad, easy questions and then move to more specific ones. This keeps people engaged and helps prevent survey fatigue.

The science behind creating reliable survey questions is surprisingly detailed. Researchers in the 1990s used a nine-step process, which included focus groups and pilot testing, just to refine questions for a large-scale study on religious attitudes. You can discover more about their methodical approach in this in-depth study from PMC.

Frequently Asked Questions

You've got the basics down, but let's tackle a few common questions that pop up when you start putting closed-ended questions into practice. Getting the strategy right from the start is what separates messy data from clear, actionable insights.

When Should I Use a Closed-Ended Question Instead of an Open-Ended One?

Great question. You'll want to reach for closed-ended questions when you need clean, structured data that you can easily count and analyze. They're perfect for things like measuring customer satisfaction, gathering demographics, or seeing how users feel about a specific set of features. Think of them as your tool for getting the "what."

On the other hand, if you're trying to explore new ideas, dig into the "why" behind a user's choice, or uncover issues you hadn't even thought of, an open-ended question is your best bet.

What Is the Biggest Mistake to Avoid?

The single most common mistake is creating answer options that aren't mutually exclusive or collectively exhaustive. In plain English, this means your choices either overlap, or a person's real answer isn't available as an option at all.

This error is a classic survey-killer. It forces people to pick inaccurate answers, which completely skews your data and can lead you to make some seriously flawed decisions. Always, always double-check that every possible person has one—and only one—clear, correct option to choose from.

Can I Combine Closed and Open-Ended Questions in a Survey?

Absolutely! In fact, this hybrid approach is often the secret sauce for getting the most comprehensive feedback. You can use closed-ended questions to gather the bulk of your quantitative data, making analysis easy.

Then, tack on a final, open-ended question like, "Is there anything else you'd like to share with us?" This simple addition gives you the best of both worlds: structured data for your charts and rich, qualitative stories that bring those numbers to life.

Turn your customer feedback into a growth engine. Surva.ai gives SaaS teams the tools to build intelligent surveys, reduce churn, and collect powerful testimonials. Start making data-driven decisions today with Surva.ai.