Biased Questions in Surveys: Spot and Fix to Improve Data Quality

Learn how biased questions in surveys skew results and how to spot leading, loaded, and ambiguous wording with practical tips.

Ever wonder why your survey results feel slightly off? You know your customers and your product, but the data does not line up with reality. The problem often is not the answers you get, but the questions you ask.

Why Your Survey Questions Might Be Broken

It's a common trap. We craft questions with the best intentions, but subtle biases in our wording can completely derail the results. These flawed questions act like a gentle nudge, guiding respondents toward a certain answer without them even realizing it. Suddenly, you are not getting their genuine thoughts; you are getting a warped reflection of what you wanted to hear.

This is a problem that can poison your entire feedback loop. When you build your product roadmap, marketing strategy, or customer success plan on this kind of skewed data, you are setting yourself up for failure. You might end up chasing the wrong features, misinterpreting churn signals, or pouring resources into solving problems that do not actually exist.

The Real Cost of Bad Questions

The fallout from biased questions goes way beyond a few inaccurate charts. It hits your business where it hurts, impacting your most important metrics and decisions.

- Garbage In, Garbage Out: You think you are gathering priceless customer intelligence, but biased questions just feed you a distorted view of what people truly think and feel.

- Wasted Time and Money: Acting on flawed insights means you are investing your team's energy and your company's budget into the wrong initiatives.

- Eroded Trust: Let's be honest, nobody likes feeling manipulated. If your customers sense a survey is pushing them in a certain direction, it can sour their perception of your brand.

It's a domino effect. When your initial data is compromised by biased questions, even running it through fundamental data summaries like a five-number summary will only give you neatly organized, but ultimately misleading, insights. The bias is baked in from the start.
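To see why a tidy summary is no safeguard, here is a minimal Python sketch. The two rating lists are invented for illustration (hypothetical 1-to-5 answers to a neutral and a leading version of the same question), and `five_number_summary` is a helper name introduced here, not part of any survey tool.

```python
from statistics import median, quantiles


def five_number_summary(values):
    """Return (min, Q1, median, Q3, max) for a list of numbers."""
    ordered = sorted(values)
    # quantiles(n=4) returns the three quartile cut points; we keep Q1 and Q3.
    q1, _, q3 = quantiles(ordered, n=4, method="inclusive")
    return (ordered[0], q1, median(ordered), q3, ordered[-1])


# Hypothetical 1-5 ratings, invented for illustration only.
neutral_wording = [1, 2, 3, 3, 4]
leading_wording = [2, 3, 4, 4, 5]  # assumed: same respondents, nudged up one point

print(five_number_summary(neutral_wording))
print(five_number_summary(leading_wording))
```

Both summaries look equally clean. Nothing in the output reveals that the second set was shifted by the wording of the question.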

To build a solid foundation, it helps to know what makes a question effective in the first place. For more on that, check out our guide on what makes a good survey question. For now, let's see how you can spot these broken questions and fix them.

Common Types of Question Bias and How to Spot Them

To get clean, reliable data, you first have to recognize the common ways bias sneaks into survey questions. Think of yourself as a detective learning to spot clues. Certain question structures are major red flags that can subtly nudge a respondent away from their true opinion.

Once you can identify these patterns, fixing them becomes second nature. The goal is always to rephrase your questions to be as neutral and direct as possible. This means you’re measuring what your customers actually think, not just how they react to clever wording.

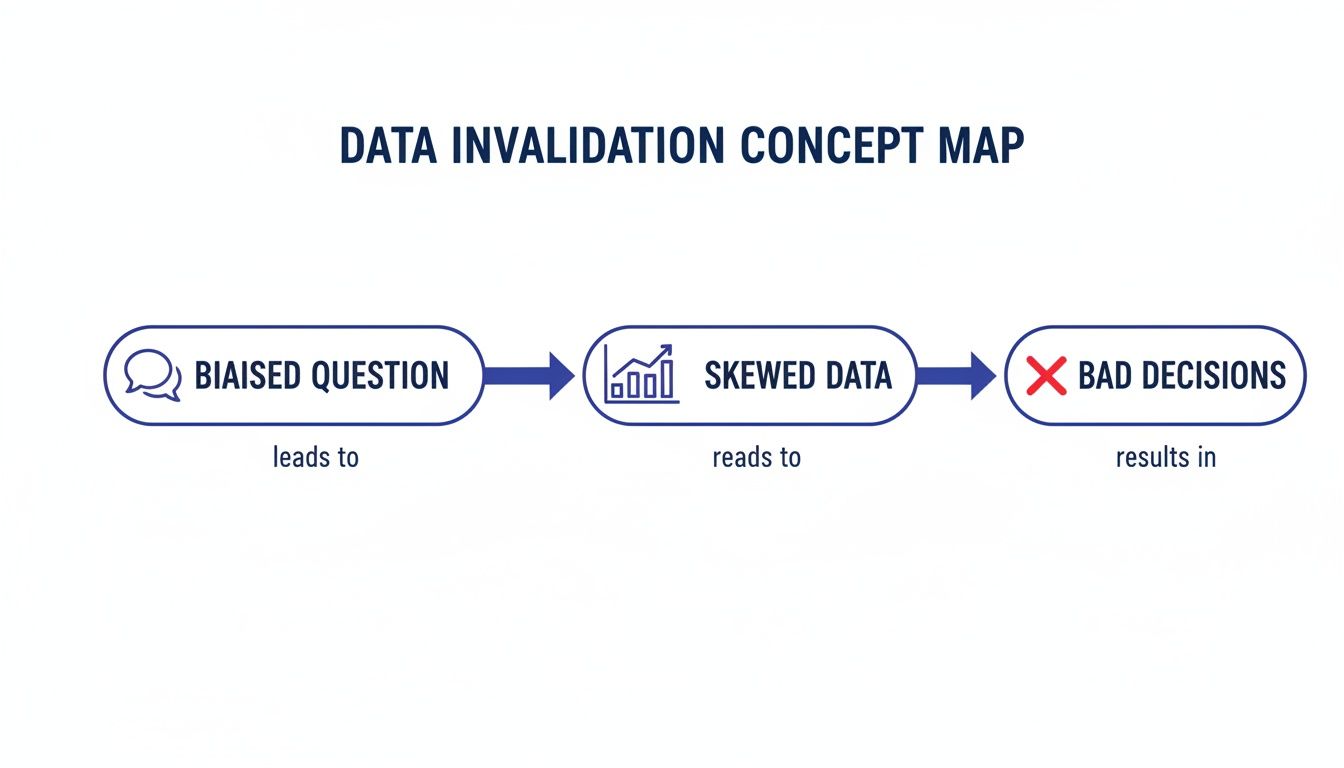

The path from a biased question to a bad business decision is shorter than you think.

As you can see, the integrity of your entire feedback loop hinges on asking neutral questions from the very start.

Leading Questions

A leading question is one of the most common culprits. It uses suggestive language that gently hints at a "correct" or desired answer, basically putting words in the respondent's mouth.

- Biased Example: "How much did you enjoy our new, user-friendly interface?"

- Why It's Biased: Words like "enjoy" and "user-friendly" frame the interface positively. This makes it socially awkward for someone to disagree, pressuring them into giving a more positive rating than they might have otherwise.

- Improved Version: "How would you describe your experience with our new interface?"

The rewrite removes the positive spin and opens the door for a truly honest answer, whether it's good, bad, or somewhere in between.

Loaded Questions

Loaded questions are a bit more deceptive. They bury an unverified assumption about the respondent within the question itself. Answering in any way forces the user to accept that underlying assumption as true, which is both frustrating and a recipe for bad data.

- Biased Example: "What do you find most frustrating about our slow customer support?"

- Why It's Biased: This question assumes the respondent has found customer support to be slow and frustrating. If they have not, there’s no way for them to answer accurately.

- Improved Version: "How would you rate the speed of our customer support?"

The better version measures perception first, without making any assumptions. This allows you to gather genuine feedback instead of just confirming a preconceived notion.

Double-Barreled Questions

This one is a classic survey design mistake. Double-barreled questions ask about two separate things in a single question but only allow for one answer. This makes it impossible for the respondent to answer accurately if they feel differently about each part.

- Biased Example: "Was our pricing clear and was the checkout process easy?"

- Why It's Biased: What if the pricing was perfectly clear, but the checkout process was a nightmare? Or vice versa? A simple "yes" or "no" cannot possibly reflect their experience with both elements.

- Improved Version: Ask two separate questions, "How clear did you find our pricing?" and "How easy was our checkout process?"

Splitting it into two distinct questions lets you get specific, actionable feedback on each part of the user journey. The impact of these flawed questions is huge. Research shows they can inflate agreement by 16% in B2B surveys simply by confusing people.

How Question Bias Distorts Key SaaS Metrics

This is not just some theoretical problem. It directly hammers the metrics you rely on to measure success.

Imagine your Net Promoter Score (NPS) suddenly shoots up. On the surface, that looks like a huge win. But what if the survey question was something like, "How likely are you to recommend our fantastic, industry-leading software to a friend?"

That’s a leading question, and it’s basically nudging users to give you a higher score. Your team might be high-fiving over an inflated NPS, all while deep-seated customer dissatisfaction is flying completely under the radar.

This disconnect gives you a false sense of security. You end up delaying important product updates or missing the early warning signs of churn because, hey, the numbers look great.
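For reference, NPS is the percentage of promoters (scores of 9 or 10) minus the percentage of detractors (scores of 0 through 6). The sketch below uses invented scores to show how a small upward nudge can change the result. `net_promoter_score` is a name chosen here for illustration, and the one-point shift is an assumption, not a measured effect.

```python
def net_promoter_score(scores):
    """Compute NPS from 0-10 ratings: % promoters minus % detractors."""
    if not scores:
        raise ValueError("scores must not be empty")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical responses, invented for illustration only.
neutral_scores = [10, 9, 8, 7, 6, 6, 5, 9, 8, 3]
# Assume the leading wording nudges every respondent up one point (capped at 10).
leading_scores = [min(s + 1, 10) for s in neutral_scores]

print(net_promoter_score(neutral_scores))
print(net_promoter_score(leading_scores))
```

Because NPS depends on which bucket each score lands in, even a one-point drift can move several respondents from detractor to passive or from passive to promoter.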

How Bias Skews Customer Satisfaction Scores

Customer Satisfaction (CSAT) scores are another prime victim of survey bias. A question like, "How satisfied were you with our quick and helpful support team?" is not neutral. It sets a positive expectation right from the start. This kind of framing can artificially inflate your CSAT scores, painting a rosy picture of customer happiness that just isn’t real.

Relying on a high CSAT score from a biased question can lead to a world of hurt:

- Misallocated Resources: You might decide to invest less in your support training, thinking your team is knocking it out of the park when they’re actually struggling.

- Hidden Frustrations: Real customer pain points never get resolved because your survey did not provide a safe, neutral space for them to speak up.

- Unexpected Churn: You’re left scratching your head, wondering why customers are leaving when the CSAT scores told you everything was fine.

The real danger of biased questions is that they hide the truth. A tiny tweak in how you phrase a question can be the difference between spotting a churn risk and losing a valuable customer for good.

The True Cost of Inaccurate Churn Signals

Cancellation surveys are your last, best chance to figure out why a customer is walking away. But if that final touchpoint is full of biased questions, you get garbage feedback right when you need clarity the most.

Take this loaded question in a cancellation flow: "Did you find our advanced features too complex to use?" This question assumes the user’s problem was complexity, forcing them down a specific feedback path. It slams the door on discovering the real reason they left, which could have been anything from pricing to a competitor’s slick new offer or a missing integration.

Without clean, unbiased feedback, you cannot accurately diagnose the root causes of churn. You could waste months "simplifying" features that were never the problem in the first place, while the actual issues continue to drive more customers out the door.

Fixing biased survey questions isn’t just about data purity. It's a business imperative for sustainable growth.

The Sneaky Influence of Culture and Social Desirability

Sometimes, the way you word a question is perfect, but the answers you get are still skewed. This happens because of subtle human tendencies that are hard to shake, like our deep-seated need to be liked and to agree with others. Two of the biggest culprits here are social desirability bias and acquiescence bias.

Social desirability bias is that natural human impulse to present ourselves in the best possible light. When people answer survey questions, especially on sensitive topics, they often choose the answer that makes them look good or seems socially acceptable, rather than the one that’s 100% true.

Then there’s acquiescence bias, often called "yea-saying." This is the tendency for people to agree with statements regardless of their content. It can happen when they’re unsure, trying to be agreeable, or just rushing through a survey. Both of these biases can quietly corrupt your data without you even realizing it.

How Social Norms Mess With Survey Answers

Let’s imagine you are surveying users about their productivity habits. You might ask something like, "Do you frequently multitask to maximize your workday?" You will probably get a ton of "yes" answers.

Why? Because in many professional cultures, multitasking is hailed as a superpower. People will agree with the statement to align themselves with that ideal, even if their real work habits look more like chaotic context-switching. This creates a huge gap between what people actually do and what they say they do.

When respondents are more focused on looking good than being honest, you're not measuring reality. You're measuring their perception of social expectations.

One of the best ways to fight this is to guarantee privacy. When users know their feedback is confidential, the pressure to give the "right" answer drops. For a deeper look, check out our guide on how to create anonymous surveys and get more honest feedback.

The Problem Gets Bigger with Global Surveys

These biases get even trickier when you’re surveying people across different countries and cultures. What’s considered polite or socially desirable in one place can be completely different somewhere else. A one-size-fits-all survey will almost certainly fail because it does not account for these cultural nuances, leading to some seriously flawed global strategies.

This is not just a minor detail. Research shows that acquiescence bias varies wildly by country. For example, online surveys in Latin America can show agreement rates 20-30% higher than what you would expect. An Ipsos study found that unadjusted satisfaction ratings were inflated by 12-18% in high-acquiescence regions compared to Nordic countries. You can get the full picture by learning more about how cultural differences affect survey data.

Here are a few cultural factors to keep in mind:

- High-Context vs. Low-Context Cultures: In many cultures, maintaining harmony is a top priority, which can pump up acquiescence bias. People might agree with you just to be polite, not because they actually share your opinion.

- Directness in Communication: How direct people are also plays a huge role. A question that seems totally neutral in the U.S. might come across as blunt or even rude in Japan, changing how people respond.

- How People Use Rating Scales: Even a simple 1-to-5 scale isn’t universal. Some cultures are more likely to use the extremes ("1" or "5"), while others tend to play it safe and stick to the middle.

If you ignore these cultural factors, you could end up making major strategic decisions based on data that simply isn’t comparable from one market to the next.

A Practical Toolkit for Preventing Survey Bias

Spotting bias is one thing, but stopping it before it starts is the real goal. The good news? You do not need to be a data scientist to build a more objective survey. A few smart pre-launch checks can catch the vast majority of mistakes before they ever have a chance to mess with your data.

Think of it as your quality control process for getting feedback you can actually trust.

This toolkit is all about simple, effective methods you can put into practice right away to keep biased questions from skewing your results.

Start with Pilot Testing

Before you blast your survey out to hundreds or thousands of people, do a small pilot test first. This just means sharing the survey with a small, diverse group, even just a few colleagues from different departments, to see how they actually interpret the questions.

It’s amazing what you will find.

- Catch confusing language: What seems perfectly clear in your head might be completely baffling to someone else. Pilot tests are great for flagging confusing jargon or awkward phrasing.

- Check for flow and length: Get honest feedback on the experience. Does the survey drag on forever? Is the question order logical, or does it feel disjointed?

- Identify potential bias: Simply ask your testers if any questions felt like they were pushing them toward a certain answer. A fresh pair of eyes will spot the leading or loaded questions you’ve become blind to.

Use A/B Testing to Refine Questions

If you’re on the fence about whether a question is truly neutral, A/B testing is your best friend.

It’s pretty straightforward. Create two versions of the same question. One is the original you suspect might be biased, and the other is a rewritten, more neutral alternative. Then, you send each version to a different, random segment of your audience.

If you see a major difference in the responses between version A and version B, that’s a huge red flag. It’s a clear sign the original phrasing was nudging people in a specific direction. This approach lets the data tell you which wording gets the most honest feedback.
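One way to judge whether a difference between the two versions is "major" is a two-proportion z-test on the share of favorable answers. The article does not prescribe a specific test, so treat this as a sketch under that assumption. The counts and the 0.05 cutoff are illustrative, and `two_proportion_z_test` is a helper written for this example.

```python
import math


def two_proportion_z_test(hits_a, n_a, hits_b, n_b):
    """Two-sided z-test for a difference between two proportions.

    Returns (z, p_value).
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("both groups need at least one response")
    rate_a = hits_a / n_a
    rate_b = hits_b / n_b
    pooled = (hits_a + hits_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # Everyone (or no one) answered favorably in both groups.
        return 0.0, 1.0
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: respondents giving a favorable answer to each version.
z, p = two_proportion_z_test(hits_a=140, n_a=200, hits_b=110, n_b=200)
print(f"z = {z:.2f}, p = {p:.4f}")
if p < 0.05:  # conventional cutoff, assumed here
    print("The wording likely changed how people answered.")
```

With small segments, a large gap can still be noise, so it helps to decide on the sample size and cutoff before looking at the results.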

Add a Final Polish

A couple of final checks can make a world of difference. First, run your questions through a readability calculator. Measures like the Flesch-Kincaid grade level can quickly tell you if your language is too complex, helping you simplify things for better, faster comprehension.
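If you want a quick check without a separate tool, the Flesch-Kincaid grade level can be approximated in a few lines of Python. The formula is the standard one; the syllable counter is a rough heuristic written for this sketch, so scores will differ slightly from dedicated calculators. The second question is a made-up example of dense wording.

```python
import re


def count_syllables(word):
    """Rough heuristic: count vowel groups, dropping a trailing silent 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text):
    """Approximate Flesch-Kincaid grade level of a piece of text."""
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        raise ValueError("text contains no words")
    sentences = max(len(re.findall(r"[.!?]+", text)), 1)
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / sentences + 11.8 * syllables / len(words) - 15.59


plain_question = "How easy was our checkout process?"
# Hypothetical dense wording, invented for comparison.
dense_question = ("To what extent did the transactional interface "
                  "facilitate frictionless purchase completion?")

print(round(flesch_kincaid_grade(plain_question), 2))
print(round(flesch_kincaid_grade(dense_question), 2))
```

A higher grade level means harder reading. Single questions are very short texts, so use the score to compare alternative wordings rather than as an absolute target.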

Also, think about how cultural response styles might color your results, especially with rating scales. For example, a new product concept might score a 72/100 in Japan but only a 45/100 in Germany, purely due to different cultural norms around expressing enthusiasm or criticism. This kind of variation can inflate "global" data by 14% on average, so always keep your audience's background in mind.

Beyond just tweaking your questions, a solid grasp of different strategies for collecting customer feedback is key to making sure the data you collect is sound from start to finish.

Answering Your Questions About Survey Bias

Even with a solid plan, a few questions about survey bias always seem to pop up. It is one thing to know the theory, but putting it into practice can feel like navigating a minefield.

Let's clear up some of the gray areas with direct answers to the questions our teams hear most often.

Is It Ever Okay to Use a Leading Question?

We get this question a lot, and the answer is almost always no. It is tempting to think a slightly leading question can "warm up" a respondent or confirm a hunch, but it almost always backfires. The moment you introduce a leading phrase, you are no longer measuring someone's true opinion. You are just measuring their reaction to your suggestion.

Think about it this way. Asking, "How much did our new feature improve your workflow?" is a shortcut that assumes it was an improvement. A neutral question like, "How has our new feature impacted your workflow?" gives them space to say it made things better, worse, or had no impact at all. The goal is truth, not just validation.

Any data gathered from a leading question is fundamentally compromised. It’s better to risk a neutral or even negative response than to collect positive feedback you can't trust.

At the end of the day, the only feedback that leads to smart business decisions is the kind that reflects genuine user sentiment.

How Can I Ask About Sensitive Topics Neutrally?

Asking about things like pricing, satisfaction with a manager, or reasons for canceling a subscription requires a delicate touch. The key is to create a sense of psychological safety and neutrality. A biased question on a sensitive topic can feel accusatory or judgmental, causing people to either shut down or give you the "right" answer instead of the real one.

Here are a few ways to approach it:

- Frame questions indirectly. Instead of a confrontational question like, "Why are you unsatisfied with our pricing?" try a softer approach. A better question is, "Which of the following best describes your perception of our pricing?" Then offer options like "It's a great value," "It's priced fairly," or "It's more expensive than I expected."

- Guarantee anonymity. Make it crystal clear that their answers are completely confidential. When people know their identity is protected, they are far more likely to give you honest, unfiltered feedback on sensitive stuff.

- Use scaled questions. Instead of a direct yes/no, a scale can feel less intimidating. For example, "On a scale of 1 to 5, how comfortable are you with our current pricing?" allows for a more nuanced answer and feels less direct.

How Do I Train My Team to Avoid Bias?

Getting your whole team on the same page about recognizing and avoiding biased questions is one of the best things you can do for your data quality. It is less about memorizing rules and more about developing a mindset of objectivity.

Start by building a simple resource library. Create a "cheat sheet" of the usual suspects like leading, loaded, and double-barreled questions, with clear before-and-after examples that are relevant to your product. From there, hold a quick workshop where the team reviews real surveys (both good and bad) and talks through why certain questions work and others fall flat.

Finally, make peer review a non-negotiable part of your process. Before any survey goes live, have at least one other person review the questions with the specific goal of hunting for bias. This simple step not only catches errors but also reinforces good habits over time.
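A cheat sheet can also be turned into a lightweight pre-review check. The sketch below is a hypothetical helper, not a feature of any survey tool: the term lists are drawn from the biased examples in this article, and the "and" rule is a crude stand-in for spotting double-barreled questions.

```python
import re

# Starter lists drawn from the biased examples in this article; extend them
# with wording that is relevant to your own product.
LEADING_TERMS = ["enjoy", "user-friendly", "fantastic", "industry-leading",
                 "quick", "helpful", "improve"]
LOADED_TERMS = ["slow", "frustrating", "too complex"]


def flag_question(question):
    """Return a list of possible bias types found in a survey question."""
    text = question.lower()
    flags = []
    if any(re.search(rf"\b{re.escape(t)}\b", text) for t in LEADING_TERMS):
        flags.append("leading")
    if any(re.search(rf"\b{re.escape(t)}\b", text) for t in LOADED_TERMS):
        flags.append("loaded")
    # Crude heuristic: "and" often joins two topics in a single question.
    if re.search(r"\band\b", text):
        flags.append("double-barreled")
    return flags


print(flag_question("How much did you enjoy our new, user-friendly interface?"))
print(flag_question("Was our pricing clear and was the checkout process easy?"))
print(flag_question("How would you describe your experience with our new interface?"))
```

A keyword check like this will produce false positives (plenty of fair questions contain "and") and will miss subtle framing, so it works best as a prompt for the human reviewer rather than a replacement.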

Ready to build surveys that deliver clear, unbiased insights? Surva.ai gives you the tools to create neutral questions, test your survey flows, and analyze feedback with AI-powered clarity. Start collecting data you can trust at https://www.surva.ai.